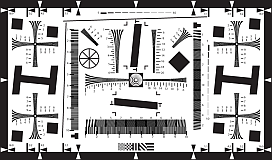

Infrared SFRplus test charts available now

Slanted Edge Noise reduction (Modified Apodization Technique)

For measurement of sharpness, the main driver of variation is noise. A powerful noise reduction technique called modified apodization is available for slanted-edge measurements (SFR, SFRplus, eSFR ISO and SFRreg). This technique makes virtually no difference in low-noise images, but it can significantly improve measurement accuracy for noisy images, especially at high spatial frequencies (f > Nyquist/2). It is applied when the MTF noise reduction (modified apodization) checkbox is checked in the SFR input dialog box or the SFRplus or eSFR ISO More settings window.

Note that we recommend keeping it enabled even though it is NOT a part of the ISO 12233 standard. If the ISO standard checkbox is checked (at the bottom-left of the dialog boxes), noise reduction is not applied.

The strange word apodization* comes from “Comparison of Fourier transform methods for calculating MTF” by Joseph D. LaVeigne, Stephen D. Burks, and Brian Nehring, available on the Santa Barbara Infrared website. The fundamental assumption is that all important detail (at least for high spatial frequencies) is close to the edge. The original technique involves setting the Line Spread Function (LSF) to zero beyond a specified distance from the edge. The modified technique strongly smooths (lowpass filters) the LSF instead. This has much less effect on low frequency response than the original technique, and allows tighter boundaries to be set for better noise reduction.

*Pedicure would be a better name for the new technique, but it might confuse the uninitiated.

Modified apodization: original noisy averaged Line Spread Function (bottom; green), smoothed (middle; blue), and LSF used for MTF (top; red)
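Here is a minimal Python sketch of both versions of the technique, operating on an averaged LSF. It illustrates the idea under stated assumptions and is not Imatest's implementation. The edge center is taken to be the LSF peak, and the lowpass filter is a simple moving average. The parameters `half_width` and `smooth_len`, and all function names, are hypothetical. For simplicity, the sketch switches abruptly between the original and the smoothed LSF at the window boundary.

```python
import numpy as np

def original_apodization(lsf, half_width):
    """Original technique: zero the LSF beyond +/- half_width samples of the
    edge center (assumed here to be the LSF peak)."""
    lsf = np.asarray(lsf, dtype=float)
    center = int(np.argmax(lsf))
    out = np.zeros_like(lsf)
    lo, hi = max(center - half_width, 0), min(center + half_width + 1, lsf.size)
    out[lo:hi] = lsf[lo:hi]
    return out

def modified_apodization(lsf, half_width, smooth_len=15):
    """Modified technique (sketch): keep the LSF near the edge, but replace it
    with a strongly smoothed (moving-average lowpass) copy farther away.
    half_width and smooth_len are illustrative, hypothetical parameters."""
    lsf = np.asarray(lsf, dtype=float)
    center = int(np.argmax(lsf))
    kernel = np.ones(smooth_len) / smooth_len
    out = np.convolve(lsf, kernel, mode="same")  # smoothed LSF, same length
    lo, hi = max(center - half_width, 0), min(center + half_width + 1, lsf.size)
    out[lo:hi] = lsf[lo:hi]                      # untouched near the edge
    return out

def mtf_from_lsf(lsf):
    """Normalized MTF: magnitude of the FFT of the LSF, scaled so MTF(0) = 1."""
    spectrum = np.abs(np.fft.rfft(lsf))
    return spectrum / spectrum[0]
```

With a noisy synthetic LSF, such as a narrow Gaussian plus random noise, the MTF computed from the modified LSF should track the noise-free MTF more closely at high spatial frequencies than the MTF from the raw noisy LSF. The part of the LSF close to the edge is left untouched.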

Microsoft Lync Logo selects Imatest

Imatest is the standard test software used in the recently published Microsoft USB peripheral requirements specification entitled “Optimized for Microsoft Lync Logo”, which can be downloaded here.

Region Selection bug workaround

Symptoms of problem:

Upon selection of a region of interest, program stops working, either not responding or crashing.

DOS window error messages:

- Operation terminated by user during pause (line 21) In newROI_quest

- Undefined function or method ‘figure1_KeyPressFcn’ for input arguments of type ‘struct’.

- Error while evaluating figure KeyPressFcn – Segmentation violation detected

Source of problem:

Automatic translation software such as 有道首页 (Youdao Dictionary) and 金山词霸 (PowerWord).

Solution to problem:

Temporarily disable the translation software while performing an ROI selection.

We will be working to make our software compatible with these sorts of translation programs in the future, as well as improving our own internationalization. Sorry for the inconvenience.

Measuring Test Chart Patches with a Spectrophotometer

Using Babelcolor Patch Tool or SpectraShop 4

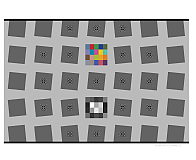

This post describes how to measure color and grayscale patches on a variety of test charts, including Imatest SFRplus and eSFR ISO charts, the X-Rite Colorchecker, ISO-15739, ISO-14524, ChromaDuMonde CDM-28R, and many more, using a spectrophotometer and one of two software packages.

- Babelcolor PatchTool which works with reflective test charts

- Robin Myers SpectraShop 4 which works with both reflective and transmissive (backlit) test charts

Measurement results are stored in CGATS files, which can be used as reference files for grayscale and color chart analysis in Multicharts, Multitest, Colorcheck, Stepchart, and SFRplus. In many cases, custom reference files provide more accurate results than the default values.

In addition, Argyll CMS is a free, Open Source, command line-based package that can be used for a number of measurements, including illumination intensity and spectrum. See the Argyll CMS documentation for more details.

Spectrophotometer

At Imatest we currently use the i1Basic Pro 2 (a good choice if you’re shopping for a new instrument). We’ll call it the i1Pro in the text below. It’s one of several instruments supported by Babelcolor PatchTool and Robin Myers SpectraShop 4 software. The i1Pro is primarily designed for reflective readings, but it can also be used to measure transmissive (backlit) charts.

- If you don’t have the original disk, load the drivers and software from X-Rite. For our i1Pro we clicked on Software downloads (5 Items) (on this page) and loaded all relevant software. For the i1Basic Pro 2, go to this page.

- Install the drivers for your system and i1Diagnostics. Run i1Diagnostics to make sure your hardware is functioning properly.

- The other programs for the i1Pro are useful for purposes such as monitor calibration, but not directly relevant to chart calibration/patch measurement.

Babelcolor Patch Tool

Read the description of PatchTool on the Babelcolor page, then download and install it. You’ll need to purchase it in order to run Patch-Reader (no luck with evaluation mode). Registration is straightforward (no special instructions needed).

- Open PatchTool. The window is very simple— not much to it.

- Click Tools, Patch-Reader… to open the Patch-Reader window.

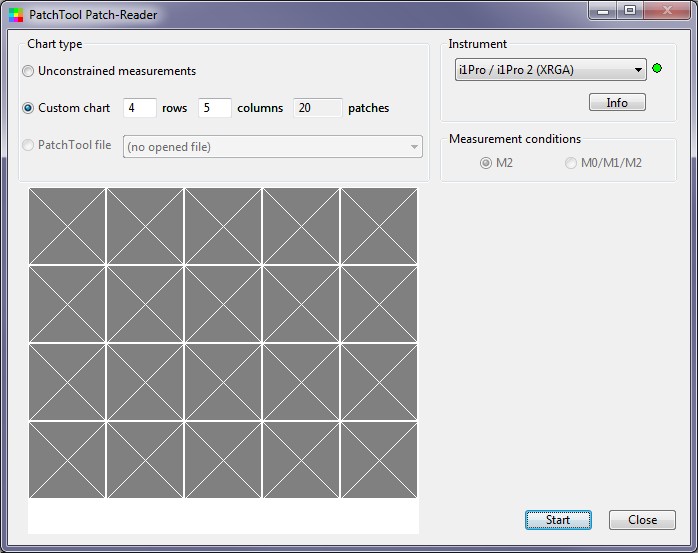

- Select the Chart type. Click Custom chart for rectangular grid charts (like the X-Rite Colorchecker), and select the correct number of rows and columns. The default is 4 rows, 6 columns (for the Colorchecker). Select 4 rows, 5 columns for SFRplus color and grayscale charts. Unconstrained measurements work perfectly well for rectangular grid charts and must be used for charts that don’t have a regular rectangular grid (like the ISO-15739, CDM-28R, etc.). The Patch-Reader window will look like this.

Initial Patch-Reader window (4 rows, 5 columns)


- Press Start. A window opens with the following message for calibrating the spectrophotometer. Follow instructions and press .

| REFLECTANCE CALIBRATION: Remove the AMBIENT DIFFUSER if installed. Place the i1Pro on its BASE (white tile). When ready, press the ‘Return’ or ‘Enter’ keys or close this message window. NOTE: This calibration may take several seconds! |

- After you finish the calibration, and buttons appear on the lower-right of the Patch-Reader window.

- Place the i1Pro on the first patch of the chart and click the button on the side. This is equivalent to pressing (which should never be necessary).

- Now go through the patches one by one, by rows (for rectangular charts). For non-rectangular charts you’ll need to know the patch order, which is described below. For rectangular grid charts the Patch-Reader window displays the location of the next patch to measure. Be sure to position the i1Pro carefully over the center of the patch and press it firmly against the target to minimize stray light. (Subdued room light doesn’t hurt.) (For an m-row × n-column chart, the order is Row 1-Col 1, Row 1-Col 2, … Row 1-Col n, Row 2-Col 1, etc.)

| A little inconsistency: although you enter patches by rows (R1C1, R1C2, …, R1Cn, R2C1, … R2Cn, …) at Patch-Reader’s prompting, the patches in CGATS files (created using Custom chart: m rows x n columns) are stored by columns (R1C1, R2C1, …, RmC1, R1C2, …, RmC2, …). This is NOT consistent with CSV files, which are stored in patch order, i.e., by rows (R1C1, R1C2, … R1Cn, R2C1, … R2Cn, … for rectangular charts). Imatest recognizes the file type (CSV or CGATS) and interprets the patch order correctly (using the LGOROWLENGTH setting in CGATS files). |

- You can use the mouse to select a patch to measure, i.e., you can go back and re-measure if you have any doubts about measurement quality.

- When you have finished, the Patch-Reader window will look like this. Examine this image carefully to be sure it resembles the chart: all patches should look correct. You can still select patches to re-measure if necessary.

Patch-Reader window after all patches have been read


- Click , and a window appears with the following options.

| Do you want to Save these measurements? Click ‘Cancel’ to continue making measurements. |

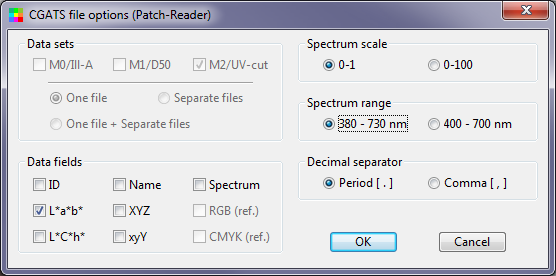

- When you click , the following window appears. For typical Imatest use, only the L*a*b* data field needs to be checked. ID (SAMPLE_ID; the numeric sequence) and Name (SAMPLE_NAME; the alpha column and numeric row, i.e., A1, …, A4, B1, …, F4) may be checked, but they will be ignored by Imatest. XYZ and xyY may be checked, but they will be ignored if L*a*b* is checked. (The order of precedence in reading data is L*a*b*, XYZ, then xyY.)

- The save file name should include the media type (matte, luster, …) and any other information needed to identify the chart.
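The column-by-column storage order described in the "little inconsistency" note above can be converted back to patch order with a few lines of Python. This is a sketch for illustration only; the function name is hypothetical and is not part of Imatest or PatchTool. It assumes a complete m × n grid. It also relies on a property of the PatchTool example file in this section: LGOROWLENGTH equals the number of chart rows (4 for a 4-row × 5-column chart).

```python
def cgats_to_patch_order(values, n_rows, n_cols):
    """Reorder values stored column by column (R1C1, R2C1, ..., RmC1, R1C2, ...)
    into patch order, i.e. row by row (R1C1, R1C2, ..., R1Cn, R2C1, ...).
    Hypothetical helper; n_rows plays the role of LGOROWLENGTH here."""
    if len(values) != n_rows * n_cols:
        raise ValueError("expected n_rows * n_cols values")
    patches = [None] * len(values)
    for k, value in enumerate(values):
        row, col = k % n_rows, k // n_rows    # 0-based grid position of the k-th stored value
        patches[row * n_cols + col] = value   # 0-based row-major (patch order) index
    return patches

# Example: a 4-row x 5-column chart, labels stored column by column
stored = [f"R{r}C{c}" for c in range(1, 6) for r in range(1, 5)]
print(cgats_to_patch_order(stored, n_rows=4, n_cols=5)[:6])
# prints ['R1C1', 'R1C2', 'R1C3', 'R1C4', 'R1C5', 'R2C1']
```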

PatchTool writes files in CGATS format (an industry standard best explained in the PatchTool Help PDF document). Imatest can read CGATS files starting with August 2013 3.10 builds. Here is an example:

CGATS.17 ORIGINATOR "BabelColor PatchTool, version 4.1.0 b321" LGOROWLENGTH 4 CREATED "2013-07-01" # Time: 1:25:46 PM INSTRUMENTATION "i1Pro" INSTRUMENT_SN "608994" MEASUREMENT_SOURCE "Illumination=D50 ObserverAngle=2 WhiteBase=Abs Filter=UVcut" ILLUMINATION_NAME "D50" OBSERVER_ANGLE "2" FILTER "UVcut" MEASUREMENT_CONDITION "M2" WEIGHTING_FUNCTION "ILLUMINANT, D50" WEIGHTING_FUNCTION "OBSERVER, 2 degree" KEYWORD "DEVCALSTD" DEVCALSTD "XRGA" # # THIS FILE CONTAINS REFLECTANCE MEASUREMENTS MADE WITH PatchTool Patch-Reader # # Chart_type: "Custom chart" # No_rows: 4 # No_columns: 5 # No_samples: 20 # NUMBER_OF_FIELDS 3 BEGIN_DATA_FORMAT LAB_L LAB_A LAB_B END_DATA_FORMAT NUMBER_OF_SETS 20 BEGIN_DATA 44.050 -4.479 -15.534 46.989 3.638 -16.591 38.129 6.395 6.321 52.539 -0.730 -3.700 40.766 32.354 14.498 42.772 -19.174 -21.994 57.309 12.668 12.012 53.507 1.771 3.460 47.533 -25.359 16.443 44.629 32.242 -6.113 61.667 -18.258 37.479 37.817 2.403 -27.741 33.075 4.416 -29.699 75.079 4.192 62.638 63.637 15.573 46.478 45.767 28.423 10.312 41.007 -8.057 10.701 59.762 -28.214 -1.841 55.213 23.810 34.126 33.661 10.341 -10.492 END_DATA
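To inspect such files outside Imatest, the sketch below extracts the color values from CGATS text. It uses Python's standard `shlex` tokenizer and applies the L*a*b*-before-XYZ precedence described above; xyY is omitted for brevity. This is a minimal, hypothetical helper, not a complete CGATS.17 parser and not how Imatest reads these files. It assumes the usual layout, with keywords and data on separate lines (the example above is shown flattened onto one line), and straight double quotes around strings. The sample uses the first two patches of the PatchTool example.

```python
import shlex

def read_cgats_colors(text):
    """Return (color_space, values): values is a list of 3-tuples in file order.
    Prefers L*a*b* fields over XYZ fields (hypothetical helper)."""
    # shlex keeps "quoted strings" together and drops '#' comments to end of line.
    tokens = shlex.split(text, comments=True)
    fields = tokens[tokens.index("BEGIN_DATA_FORMAT") + 1:tokens.index("END_DATA_FORMAT")]
    data = tokens[tokens.index("BEGIN_DATA") + 1:tokens.index("END_DATA")]
    n = len(fields)
    if n == 0 or len(data) % n != 0:
        raise ValueError("data values do not match BEGIN_DATA_FORMAT")
    rows = [data[i:i + n] for i in range(0, len(data), n)]
    for space, names in (("LAB", ("LAB_L", "LAB_A", "LAB_B")),
                         ("XYZ", ("XYZ_X", "XYZ_Y", "XYZ_Z"))):
        if all(name in fields for name in names):
            cols = [fields.index(name) for name in names]
            return space, [tuple(float(row[c]) for c in cols) for row in rows]
    raise ValueError("no LAB or XYZ fields found")

sample = """CGATS.17
ORIGINATOR "BabelColor PatchTool, version 4.1.0 b321"
LGOROWLENGTH 4
CREATED "2013-07-01"  # Time: 1:25:46 PM
NUMBER_OF_FIELDS 3
BEGIN_DATA_FORMAT
LAB_L LAB_A LAB_B
END_DATA_FORMAT
NUMBER_OF_SETS 2
BEGIN_DATA
44.050 -4.479 -15.534
46.989 3.638 -16.591
END_DATA
"""
space, lab = read_cgats_colors(sample)
```

The values come back in file order. For PatchTool Custom chart files, they still need to be reordered from column order to patch order.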

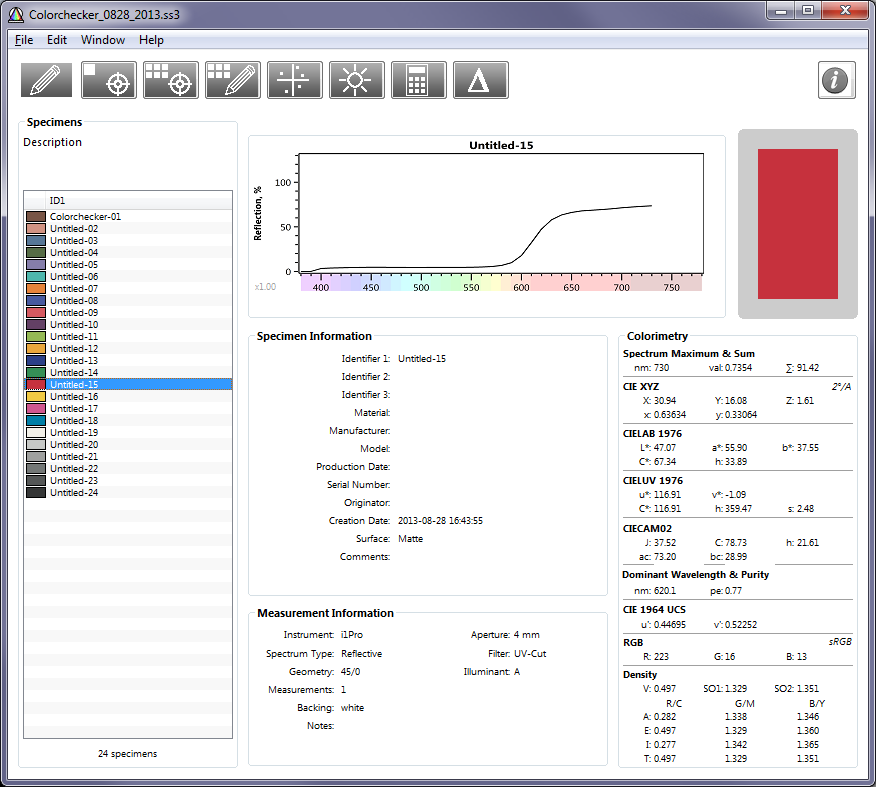

SpectraShop 4

Download and purchase SpectraShop 4 using the links at the bottom of the product description page. Both Windows and Mac versions are available. The brief Tutorials are recommended. You should also download the full PDF manual and look at the FAQ, which has troubleshooting information. Open SpectraShop 4. Click on the Measure specimens box, which is the second from the left. (I’ve tried Create and edit charts and Measure chart without luck.)

This opens the Instrument Connection window, shown on the right. Select the spectrophotometer, click , and select the Specimen Type (reflective, transmissive, etc.). Be sure the spectrophotometer is on its base (with white tile), then press .

Instrument Connection window, shown just before Calibration

After Calibration is complete, the Measure Reflective Specimens page will open. None of the settings are critical, but we recommend

When you have finished making settings, press . Place the spectrophotometer sensor over the patch. If possible, the room should be dimly lit to minimize light leakage. Take each reading by clicking the spectrophotometer button, then wait about two seconds for a beep indicating that the reading is complete. Patch order is described below. Take readings carefully: I haven’t (yet) found a convenient way to edit or redo erroneous readings.

Saving the reference file

I recommend saving results in two formats. The first is the default SpectraShop 4 SS3 format, which contains all results (including the spectrum); it is not interchangeable, but it can be reopened in SpectraShop 4. The second is CGATS, with just the results used by Imatest. To save the SS3 file, click (in the window shown below) File, Save… or Save as… The procedure for saving Imatest-readable CGATS files is shown below.

Main SpectraShop 4 window showing Colorchecker results


SpectraShop 4 Export window (for writing CGATS files)

To save the measurements in an Imatest-readable CGATS file, click File, Export… The window on the right opens. Select CGATS 1.7 ASCII as the Export Format. Spectrum should be unchecked and L*a*b* should be checked. XYZ and xyY may be checked if desired, but they will be ignored by Imatest if L*a*b* has been checked. Here are the results:

CGATS.17 ORIGINATOR “” CREATED “2013-08-28 16:43:55” INSTRUMENTATION “i1Pro” MEASUREMENT_GEOMETRY “45/0” MEASUREMENT_SOURCE “A” WEIGHTING_FUNCTION “OBSERVER,2 degree” WEIGHTING_FUNCTION “ILLUMINANT,A” NUMBER_OF_FIELDS 4 BEGIN_DATA_FORMAT SAMPLE_ID LAB_L LAB_A LAB_B END_DATA_FORMAT NUMBER_OF_SETS 24 BEGIN_DATA “Colorchecker-01” 39.80 14.43 17.09 “Untitled-02” 67.86 22.51 20.21 “Untitled-03” 48.51 -9.36 -23.27 “Untitled-04” 43.04 -10.81 20.67 “Untitled-05” 54.88 5.55 -24.67 “Untitled-06” 68.04 -32.15 -6.00 “Untitled-07” 66.70 34.88 63.83 “Untitled-08” 38.48 -0.44 -45.06 “Untitled-09” 56.15 47.12 25.25 “Untitled-10” 31.91 17.66 -16.69 “Untitled-11” 71.40 -16.98 50.80 “Untitled-12” 74.84 21.00 71.57 “Untitled-13” 27.04 0.66 -49.03 “Untitled-14” 52.88 -32.25 23.97 “Untitled-15” 47.07 55.90 37.55 “Untitled-16” 83.99 9.76 78.29 “Untitled-17” 55.75 47.38 -5.30 “Untitled-18” 47.53 -31.32 -35.23 “Untitled-19” 95.01 0.32 4.03 “Untitled-20” 80.78 -0.72 0.38 “Untitled-21” 66.36 -0.71 0.28 “Untitled-22” 49.81 -1.43 -0.39 “Untitled-23” 36.00 -0.85 -0.45 “Untitled-24” 20.92 -0.34 -0.53 END_DATA

Patch order

Charts with a regular m × n rectangular grid have patches numbered in the sequence shown in the example below for the 4-row × 6-column X-Rite Colorchecker. This is the order you should use in acquiring patch data.

| 1 | 2 | 3 | 4 | 5 | 6 |

| 7 | 8 | 9 | 10 | 11 | 12 |

| 13 | 14 | 15 | 16 | 17 | 18 |

| 19 | 20 | 21 | 22 | 23 | 24 |

Several grayscale charts (ISO-15739, etc.) that don’t have regular m x n grids have their patch order listed in Special and ISO Charts. These charts are supported by Multicharts.

For charts supported by Multicharts, you can find the patch order by running Multicharts, selecting 3. Split Colors: Reference/Input (in Display), and then checking the Numbers checkbox. This displays the patch numbers, as shown below for the DSC Labs CDM-28R.

Multicharts result, showing patch numbers, for the ChromaDuMonde 28R.


There are a few special cases, such as the Rezchecker (a tiny precision reflective chart for measuring color, tones, and MTF). The numbering follows the standard rectangular grid, but patches that have L* = a* = b* = 0 in their reference file are omitted from analysis. For the Rezchecker these include patches 1, 6, 8-11, 14-17, 37, and 42.

Rezchecker

Rezchecker numbering

Some cases are not obvious, like the eSFR ISO color patches, which are supported by Multicharts but are usually run in eSFR ISO.

eSFR ISO color patches
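The numbering rule for rectangular grids and the Rezchecker rule (skip patches whose reference L*a*b* values are all zero) can be written as two small Python functions. Both function names are hypothetical. The code sketches the rules stated above; it is not Imatest code.

```python
def patch_number(row, col, n_cols):
    """1-based patch number for a 1-based (row, col) in a grid numbered by rows,
    as in the 4-row x 6-column Colorchecker table."""
    return (row - 1) * n_cols + col

def usable_patches(reference_lab):
    """1-based numbers of patches to analyze: a reference entry with
    L* = a* = b* = 0 marks a patch that is omitted (e.g., on the Rezchecker).
    reference_lab is a list of (L, a, b) tuples in patch order."""
    return [i + 1 for i, lab in enumerate(reference_lab) if any(v != 0 for v in lab)]
```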

External Links

- Edmund Ronald’s Blog

- i1Basic Pro UVcut – predecessor to the i1Basic Pro2

Sharpness and Texture Analysis using Log F‑Contrast from Imaging-Resource

Imaging-resource.com publishes images of the Imatest Log F-Contrast* chart in its excellent camera reviews. These images contain valuable information about camera quality— how sharpness and texture response are affected by image processing— but they need to be processed by Imatest to reveal the important information they contain.

*F is an abbreviation for Frequency in Log F-Contrast.

I was motivated to write this post by my curiosity about the 36-megapixel mirrorless full-frame interchangeable-lens Sony Alpha A7R and how its image quality compares to competitive cameras (as well as cameras I now own). A post on Imaging-Resource.com says,

“In our initial press briefing, Sony made quite a big deal about its new sharpening algorithms, designed to reduce what they call “outlines” — which we’ve called “halos” for some years now. The images shown in that briefing were certainly impressive, but it’s hard to judge when there’s no standard of comparison against other cameras shooting the same scenes under controlled conditions. We had to see how it did on our own standardized studio shots before we could pass final judgment.

We’ve now done just that, and have to say we’re pretty well blown away by what we saw. Check our Sony A7R review page for our initial image comparison analysis…”

I will compare objective measurements from Log F-Contrast chart images. These provide a useful quantitative supplement to Imaging-Resource’s subjective (but vital) camera comparisons that use still-life images. They enable us to accurately assess Sony’s claim of sharpening without “halos”, to examine how noise reduction affects fine detail, and to compare different types of cameras. I’ll report on cameras of particular interest to me as well as cameras that I own.

| Sony A7R | Intriguing because of its high resolution (36 megapixels), small size and light weight for a full-frame camera, and lack of mirror. Small pixel size (relative to total field) allows the anti-aliasing filter to be eliminated. |

| Pentax 645D | Standard of comparison. Medium format. Large, heavy and relatively expensive. Known for excellent image quality. |

| Nikon D800 | Popular 36-megapixel full-frame DSLR. (A D800E image was not available.) Heavier and larger than the A7R. |

| Canon EOS-6D | 20-megapixel DSLR. I own one. Makes excellent images using off-camera raw conversion. |

| Panasonic DMC-G3 | 16-megapixel mirrorless interchangeable camera with eye-level electronic viewer (which I appreciate because I can’t focus well on regular screens without reading glasses). |

| Panasonic DMC-LX7 | 10-megapixel high-end compact with Leica f/1.4 zoom lens. |

| Sony RX100 II | 20-megapixel high-end compact with Zeiss f/1.8 zoom lens. (Hits a sweet spot for size vs. quality) |

| Fuji X-E2 | 16-megapixel mirrorless interchangeable camera, eye-level electronic viewer, Fuji X-Trans sensor, which allows the anti-aliasing filter to be eliminated. |

| Sigma DP1 Merrill | 14.8-megapixel compact camera with Foveon APS-C-size sensor. No Color Filter Array or anti-aliasing filter. Non-interchangeable prime lens. |

My quest may be familiar to many of you: a small lightweight camera that can produce images comparable to the large-format prints by Ansel Adams and Edward Weston that I saw at the George Eastman House when I was growing up in Rochester, NY. In a word, I’m looking for the holy grail— at an affordable price. A modest quest.

Imaging-Resource.com

Imaging-resource.com publishes outstanding camera reviews, indexed on their Camera Reviews page. To find the Log F-Contrast images, go to a review page (here for the Sony A7R), click on the Samples tab, then scroll down (about 3/4 of the way to the bottom for the A7R). Look for the Multi Target image. Click on a thumbnail taken at a low ISO speed to see the camera’s ultimate capability. Later you may want to load images taken at higher ISO speeds to see how signal processing changes the results. You’ll almost always see additional noise reduction that tends to reduce sharpness, especially in low-contrast regions. Here is Imaging-Resource’s Multi Target image, showing the Log F-Contrast chart just below the center.

Imaging-Resource Multi Target image

The Log F-Contrast chart

The Log F-Contrast chart increases in spatial frequency from left to right and decreases in contrast from top to bottom. (More precisely, the contrast is proportional to the square of the distance from the bottom, so contrast in the middle is 1/4 of the contrast at the top. This makes the chart look much better.)
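The quadratic profile that gives the factor of 1/4 quoted above can be written as a formula. The symbols are introduced only for illustration: y is the distance from the bottom of the chart, H is the chart height, and C_top is the contrast at the top.

$$
C(y) = C_{\text{top}} \left(\frac{y}{H}\right)^{2},
\qquad
C\!\left(\tfrac{H}{2}\right) = \frac{C_{\text{top}}}{4},
\qquad
C\!\left(\tfrac{3H}{4}\right) = \frac{9}{16}\,C_{\text{top}}
$$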

Log F-Contrast chart image

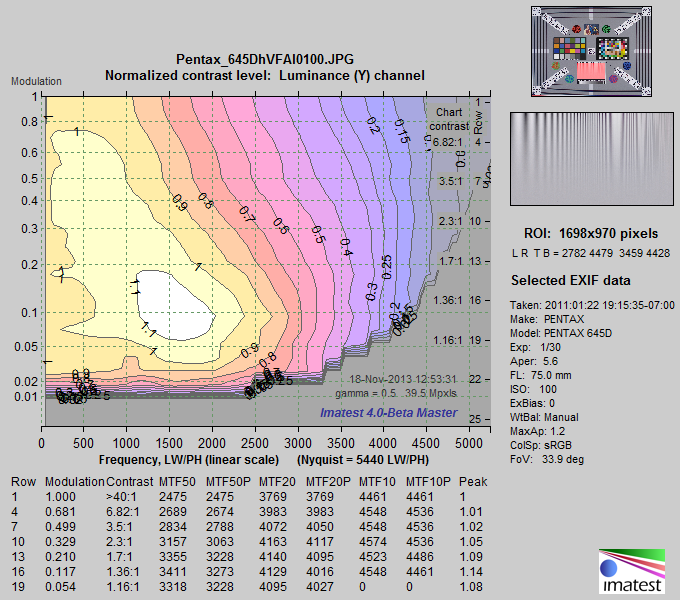

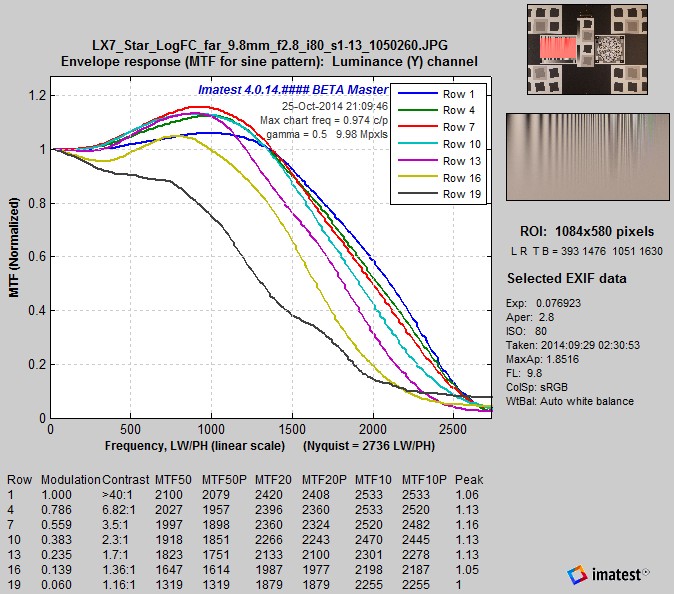

Details of running Log F-Contrast are given in the Log F-Contrast instructions. We focus on results here. The image below shows the normalized MTF* for several rows in the chart, from near the top (row 1 of 25) to about 80% of the way to the bottom (row 19 of 25). (MTF curves for rows below this are unreliable due to noise and low contrast.)

*MTF (Modulation Transfer Function), which is generally equal to SFR (Spatial Frequency Response), is a measure of image sharpness, described in Sharpness: What is it and how is it measured?

Sony Alpha A7R

Sony A7R: Normalized MTF for bands from just below the top to near the bottom


MTF (i.e., sharpness) is fairly consistent for rows 1 through 10 (the relatively high contrast portion near the top of the image), with no significant sharpening peaks (Sony’s claim of no “halos” is well-justified). The long MTF ramp is an indication of nicely done sharpening. Sharpness starts to fall at row 13 (1.7:1 contrast), and drops significantly for rows 16 and 19.

This is the result of noise reduction, which is equivalent to lowpass filtering (smoothing), and which depends on image content in most consumer cameras. Noise reduction is minimum (and its inverse, sharpening, i.e., high frequency boost, is maximum) near contrasty features— near the top of the chart. Noise reduction is strongest in smooth areas— in the absence of contrasty features— near the bottom of the chart.

Comparison with slanted-edge measurements

Results from Log F-Contrast illustrate how sharpening increases with feature contrast in the JPEG output files of most consumer cameras. Slanted edges tend to maximize sharpening, especially if they are contrasty. Imaging-Resource has an A7R image of the ISO-12233 chart, which has several high-contrast edges, making for a convenient comparison. The average edge and MTF are shown on the right. Results are quite close to row 1 of the Log F-Contrast results (blue curve, above) up to about 3000 LW/PH, but the slanted edge has higher MTF at higher spatial frequencies. It has quite a lot of energy above Nyquist, which is characteristic of a system with no anti-aliasing filter and a very sharp lens. This can be an indicator of aliasing (color moire), but none is visible in the image. This is very impressive performance.

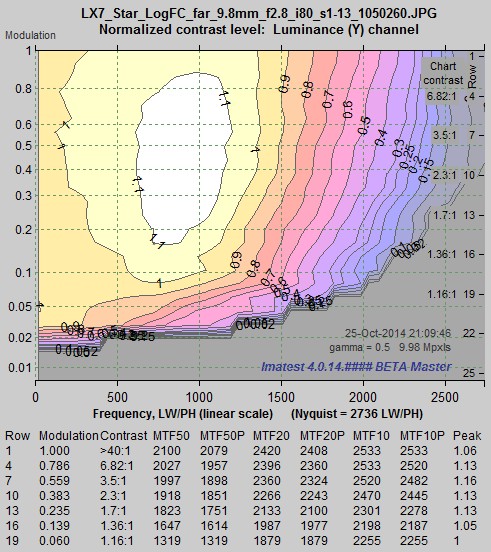

Since the multiple MTF image (above) is somewhat difficult to interpret, Imatest produces a contour plot of normalized contrast (MTF) over the image. This plot clearly shows how MTF varies with chart contrast.

Sony A7R: Normalized contrast plot

This display is clearer than the MTF plot above: it contains results for all rows with sufficient contrast for MTF to be measured, and it’s much easier to see how MTF drops as contrast decreases. It also displays key summary metrics: MTF50 (the spatial frequency where contrast falls to 50% of its low frequency value), MTF50P (the spatial frequency where contrast falls to 50% of its peak value), and comparable numbers for 20% and 10% contrast (which are more closely related to “vanishing resolution”). MTF50P is a more robust summary metric than MTF50: it is less sensitive to strong sharpening (not present here, but significant in the Canon EOS-6D).
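The two definitions can be sketched in Python as follows. This is a minimal illustration, not Imatest’s algorithm. It assumes the MTF curve is sampled on increasing frequencies, takes the “low frequency value” to be the MTF at the first (zero-frequency) sample, and finds crossings by linear interpolation. The function names are hypothetical.

```python
import numpy as np

def falloff_frequency(freqs, mtf, level, start=0):
    """First frequency after index `start` where `mtf` drops below `level`,
    located by linear interpolation between samples; None if it never does."""
    for i in range(max(start, 0) + 1, len(mtf)):
        m0, m1 = mtf[i - 1], mtf[i]
        if m0 >= level > m1:
            f0, f1 = freqs[i - 1], freqs[i]
            return f0 + (m0 - level) * (f1 - f0) / (m0 - m1)
    return None

def mtf50_mtf50p(freqs, mtf):
    """MTF50: where MTF falls to 50% of its low-frequency value (mtf[0] here).
    MTF50P: where MTF falls to 50% of its peak value, searched beyond the peak."""
    mtf = np.asarray(mtf, dtype=float)
    mtf50 = falloff_frequency(freqs, mtf, 0.5 * mtf[0])
    ipeak = int(np.argmax(mtf))
    mtf50p = falloff_frequency(freqs, mtf, 0.5 * mtf[ipeak], start=ipeak)
    return mtf50, mtf50p
```

For an unsharpened curve whose peak is at zero frequency, MTF50 and MTF50P coincide. When sharpening lifts the curve above its low-frequency value, the 50%-of-peak level is reached at a lower frequency than MTF50. For this reason MTF50P is less inflated by strong sharpening. The ratio `mtf.max() / mtf[0]` corresponds to the sharpening peak discussed in this post.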

Significant texture loss due to noise reduction is visible for modulation under 0.2 (chart contrast ratio under 1.7:1). This is typical behavior for JPEG processing. If you want this detail, you’ll have to convert raw images off the camera (with noise reduction turned down). The MTF50P values over 3,000 Line Widths/Picture Height are outstanding, particularly considering that there is no sharpening peak. With good technique you can expect razor-sharp 24×32 inch prints (the largest you can print in this format on a 24-inch printer), and you can go a lot larger with excellent quality. How many photographers actually make larger prints?
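As a rough sanity check on the print-size claim, LW/PH figures can be converted into line pairs per millimeter on the print (two line widths make one line pair). The sketch below assumes the 24-inch side of a 24×32 inch print corresponds to the picture height. The function names are hypothetical, and the code says nothing about what print resolution looks “razor-sharp.”

```python
MM_PER_INCH = 25.4

def lw_ph_to_print_lp_per_mm(lw_ph, print_height_in):
    """Line widths per picture height -> line pairs per mm on the print.
    Two line widths make one line pair (one cycle)."""
    return (lw_ph / 2) / (print_height_in * MM_PER_INCH)

def cycles_per_pixel_to_lw_ph(cy_px, picture_height_px):
    """Cycles per pixel -> line widths per picture height (Nyquist = 0.5 cy/px)."""
    return 2 * cy_px * picture_height_px
```

For 3,000 LW/PH on a 24-inch picture height, `lw_ph_to_print_lp_per_mm(3000, 24)` gives about 2.46 line pairs per mm on the print.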

Regarding Sony’s claim of an excellent sharpening algorithm with no (significant) halo, we know from slanted-edge analysis that MTF (spatial frequency response) peaks above 1 correspond to halos near edges, and that the strength of the halo is well correlated with the strength of the peak. Halos corresponding to MTF peaks under 1.1 (10% above the low frequency MTF) are generally too weak to be objectionable. So the verdict on the Sony A7R is yes, it lives up to its claims, but it’s hardly unique in that regard: the Pentax 645D, the Nikon D800 and some others (but notably not the Canon EOS-6D), also have excellent sharpening without a significant sharpening peak.

Pentax 645D

The 40-megapixel medium-format Pentax 645D is the camera to beat.

Pentax 645D: Normalized contrast plot

Pentax 645D: Normalized contrast plot

(Note that the horizontal scales are different for each plot: the maximum frequency is the Nyquist frequency.)

At high contrast levels the Sony does indeed beat the Pentax! But the Pentax appears to have NO noise reduction: MTF doesn’t drop until modulation gets down to 0.03— an extremely low level. The Pentax wins for reproducing extremely fine texture at modulation levels below 0.2. I would guess that a properly processed raw image from the Sony A7R would have comparable low-level detail (see the RX100 II raw image, below), though there might be more noise (which might not be objectionable at this ISO speed).

The Sony holds its own very well against the Pentax, which is particularly impressive considering its size, weight, and cost. The difference would only be noticeable in very large prints.

Nikon D800

Unfortunately I couldn’t find a Multi Target image for the D800E (the version without the anti-aliasing (optical lowpass) filter). This would have made for a much better comparison.

Nikon D800: Normalized contrast plot

(Note that the horizontal scales are different for most plots, but this is the same as the Sony A7R.)

Signal processing is quite similar to the Sony A7R, but MTF frequencies are about 15% lower, which is what you’d expect from the added anti-aliasing (optical lowpass) filter. We would expect performance comparable to the A7R with the D800E, which doesn’t have an anti-aliasing filter. Of course the lens could have some effect, though excellent lenses at or near optimum aperture have been used in both cases (and in other Imaging-Resource images). The lens is probably not the dominant factor here.

Canon EOS-6D

Canon’s JPEG processing— and I mean sharpening, not JPEG compression— masks the 6D’s full performance potential. (It could probably be corrected in firmware if Canon wanted.) To begin with, the image is strongly oversharpened. Halos will be clearly visible in moderately large prints (as they are in IR’s image). And the sharpening radius is 2, which boosts middle but not the highest spatial frequencies (explained here). A sharpening radius of 2 is good for moderate enlargements with average quality lenses, but it’s hardly optimum when maximum quality is required. I never use JPEGs for high quality prints from the 6D; I always convert from raw and sharpen with a smaller radius with excellent results— I’ve made some outstanding 16×24 inch prints from the 6D.

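The effect of sharpening radius can be illustrated with a simple symmetric sharpening filter that subtracts a fraction of the pixels R positions to either side. This model and the gain `k` are assumptions chosen for illustration; they are not Canon’s in-camera algorithm. For this filter the frequency response, with f in cycles/pixel, is (1 − k cos 2πfR)/(1 − k). The function names are hypothetical.

```python
import numpy as np

def sharpening_response(f, k, radius):
    """Frequency response of y[n] = (x[n] - k/2 * (x[n-R] + x[n+R])) / (1 - k).
    f is in cycles/pixel (Nyquist = 0.5); the gain is 1 at f = 0."""
    return (1 - k * np.cos(2 * np.pi * np.asarray(f) * radius)) / (1 - k)

def apply_sharpening(x, k, radius):
    """Direct implementation of the same filter on a 1-D signal
    (periodic boundaries via np.roll), useful for checking the response."""
    x = np.asarray(x, dtype=float)
    neighbors = np.roll(x, radius) + np.roll(x, -radius)  # x[n-R] + x[n+R]
    return (x - 0.5 * k * neighbors) / (1 - k)
```

With R = 1 the boost is largest at the Nyquist frequency (0.5 cycles/pixel). With R = 2 it peaks at 0.25 cycles/pixel and falls back to 1 at Nyquist. This matches the description of radius 2 boosting middle but not the highest spatial frequencies.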

Canon EOS-6D: Normalized contrast plot

(Note that the horizontal scales are different for each plot: the maximum frequency is the Nyquist frequency.)

Despite the sharpening, MTF50P is well below that of the Sony A7R. This is partly because of the way Canon’s sharpening (with radius = 2) works, boosting middle but not high spatial frequencies, and partly because the Sony has more pixels combined with an excellent lens and no anti-aliasing filter. With raw images the difference would be reduced, but the Sony is the clear winner here.

Panasonic Lumix DMC-G3

The G3 is a 16-megapixel Micro Four-Thirds mirrorless interchangeable system camera that I’ve had since 2011 (two years ago as I write this). It’s much smaller and lighter than APS-C or full-frame DSLRs, so it’s easier to carry on long trips.

Panasonic Lumix DMC-G3: Normalized contrast plot

(Note that the horizontal scales are different for each plot: the maximum frequency is the Nyquist frequency.)

MTF50P in the range of 2200 is respectable for a camera in this category, though not in the same class as the Sony, Pentax, and Nikon cameras above. There is a moderate sharpening peak, not enough to be objectionable in any viewing conditions. Noise reduction is strong for modulation under 0.2, similar to most of the cameras in this post except the Pentax. I’ve made some very nice 18×24 inch prints with this camera using an off-camera raw converter, though they’re not quite as good as prints from processed EOS-6D raw images (not JPEGs).

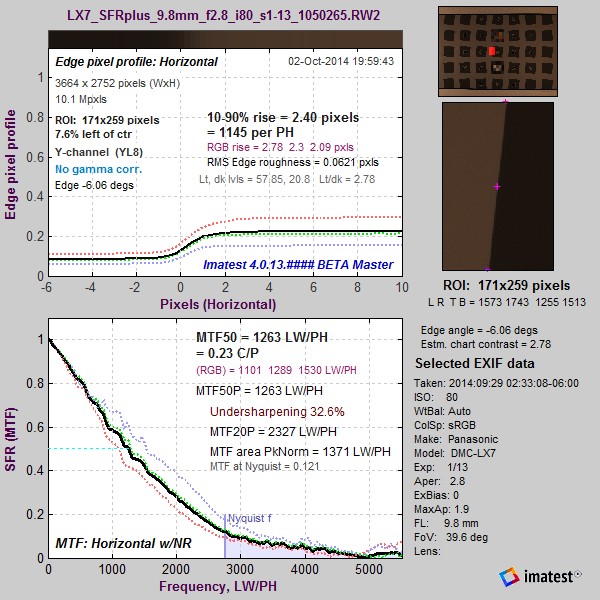

Panasonic Lumix DMC-LX7

Nobody, and I mean nobody, will take you for a serious photographer if you carry one of these little toy-like cameras. But appearances can be deceiving. A toy it is not. This 10-megapixel camera (with an f/1.4 Leica zoom lens) is capable of some pretty impressive image quality for a point-and-shoot that is only slightly too large for a pocket. I carry this camera when I’m feeling lazy but want to make fine images should the opportunity arise.

Panasonic Lumix DMC-LX7: Normalized contrast plot

(Note that the horizontal scales are different for each plot: the maximum frequency is the Nyquist frequency.)

Sharpening is rather strong, with the sharpening peak reaching 1.37, which might be objectionable in large prints. (Raw is available.) It might be a good idea to decrease sharpening from the default level. MTF50P above 2000 at high contrast levels is excellent for a 10 megapixel camera, thanks to an excellent lens and strong sharpening with radius R = 1 (which does a much better job than the R = 2 sharpening in the EOS-6D, as explained in the Sharpening page).

Sony RX100 II

My London son is absolutely delighted with his 20-megapixel RX100 (with a Zeiss f/1.8 zoom lens), which he first used to take pictures of the aurora borealis in Iceland (an application that requires excellent low light performance). It’s small enough to fit in most pockets (slightly smaller than the LX7). We’ll look at the RX100 II, which is the current model. It has a large (1-inch) sensor, unusual for such a small camera.

Sony RX100 II: Normalized contrast plot: JPEG image

(Note that the horizontal scales are different for each plot: the maximum frequency is the Nyquist frequency.)

Note that MTF50P for modulation above 0.1 is better than the Canon EOS-6D, despite the Canon’s strong sharpening. Impressive for a camera you can put in a pocket! Only at very low contrast does the big Canon out-perform the little Sony. The Sony is also quite a bit better (higher MTF50P with less sharpening) than the slightly-larger but less expensive Panasonic LX7.

The reduced MTF at very high modulation levels (above 0.8) is caused by signal processing, though I’m not sure why. Imaging-Resource has supplied a raw (ARW) version of this image, which we converted using dcraw, which has no sharpening or noise reduction. Here is the plot, which shows an MTF falloff characteristic of an unsharpened image and vertical contour lines characteristic of no noise reduction.

Sony RX100 II: Normalized contrast plot: raw (ARW) image converted by dcraw

The Sony RX100 II hits a real sweet spot: it’s small enough to fit in most pockets and capable of making serious enlargements. It may well meet the holy grail requirements. If nothing else, the new crop of cameras removes technical excuses for not making great images. (It’s still not easy to make iconic images, mostly because billions of images are made daily, and it’s hard not to get lost in the crowd. But that issue is outside the scope of this post.)

Fuji X-E2 / X-M1

The recently released (November 2013) Fuji X-E2 is a mirrorless camera with interchangeable lenses (very well reviewed) that features an eye-level electronic viewfinder. Since X-E2 images are not available, we display results for the X-M1, which has an identical 16 megapixel X-Trans sensor. This sensor features a new color filter array (CFA) pattern that reduces aliasing, allowing the anti-aliasing (Optical Lowpass) filter to be eliminated for improved resolution.

Fuji X-M1: Normalized contrast plot

MTF is significant all the way out to the Nyquist frequency (right of the graph), which is consistent with the absence of an anti-aliasing filter. Sharpening is very conservative, with a maximum peak of 1.01. MTF values would improve with additional (small radius) sharpening. Noise reduction is also conservative, not starting until modulation drops below 0.1, a lower level than for most of the cameras in this post. The New York Times just published an article about Fujifilm and the X-series, which has sold very well in a tough market.

Sigma DP1 Merrill

I won’t be purchasing this compact 14.8 megapixel camera because it has a fixed lens, and I want a zoom— available on several competitive cameras. I’m including it because I’m intrigued by the Foveon sensor, which has no color filter array and no anti-aliasing (Optical Lowpass) filter, and can potentially outperform Bayer sensors with comparable pixel count.

Sigma DP1 Merrill: Normalized contrast plot

Moderate sharpening (maximum peak = 1.22) is not likely to be objectionable. MTF is outstanding for a 14.8 megapixel sensor, and it remains significant right out to the Nyquist frequency— a clear benefit to not having an anti-aliasing filter (and having a fine lens). The low amount of noise reduction is also notable: It doesn’t really start until modulation drops below 0.05 (1.16:1 contrast). This would contribute to Sigma’s reputation (attributed mostly to the Foveon sensor) of maintaining exquisite fine detail. Now, if only it had a zoom lens like the RX100…

Summary

To distill the above results, we have created a table of summary metrics.

- MTF50P, LW/PH, row 4. The best overall sharpness metric for contrasty areas (where modulation ≈ 0.7). MTFnnP is less sensitive to oversharpening than MTFnn.

- MTF20P, LW/PH, row 4. A metric that approximates vanishing resolution in contrasty areas.

- MTF50P, LW/PH, row 16. The best sharpness metric for low-contrast areas (modulation ≈ 0.12).

- MTF20P, LW/PH, row 16. A metric that approximates vanishing resolution in low-contrast areas.

- Peak (maximum). A measure of the maximum MTF overshoot caused by sharpening. Sharpening is excessive if it is too high.

| Camera | MTF50P, row 4, high contrast (LW/PH) | MTF20P, row 4, high contrast (LW/PH) | MTF50P, row 16, low contrast (LW/PH) | MTF20P, row 16, low contrast (LW/PH) | Peak (max) | Comments |
|---|---|---|---|---|---|---|
| Sony A7R 36 Mpxl | 3338 | 4163 | 2603 | 3379 | 1.05 | No sharpening peak, as claimed. Outstanding MTF. |
| Pentax 645D 40 Mpxl | 2689 | 3983 | 3273 | 4016 | 1.14 | MTF highest at 0.1 modulation (strange). Best fine detail; no noise reduction. |
| Nikon D800 36 Mpxl | 3106 | 3780 | 2521 | 3049 | 1.01 | D800E would have been nicer. |
| Canon EOS-6D 20 Mpxl | 2434 | 2838 | 1954 | 2374 | 1.68 | Extreme sharpening, radius = 2. Use raw for best results. |
| Panasonic G3 16 Mpxl | 2219 | 2571 | 1825 | 2219 | 1.17 | |
| Panasonic LX7 10 Mpxl | 2103 | 2394 | 1589 | 1982 | 1.37 | Strongly sharpened, radius = 1; near acceptable limits. Good performance. Least expensive of the batch. |
| Sony RX100 II 20 Mpxl | 2531 | 3039 | 1973 | 2408 | 1.11 | Outstanding performance for such a tiny package. |
| Fuji X-E2 16 Mpxl | 2255 | 2778 | 2103 | 2568 | 1.03 | Low noise reduction; extended MTF. |
| Sigma DP1 Merrill 14.8 Mpxl | 2435 | 3104 | 2333 | 3031 | 1.22 | Exceptionally low noise reduction: will render very fine detail. |
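The summary metrics in the table can be computed from a sampled MTF curve. The sketch below is an illustration, not Imatest's implementation: it assumes MTF values are given at increasing frequencies in cycles/pixel, normalized so that MTF(0) = 1, and it uses linear interpolation between samples. `mtf_nn` is a hypothetical helper name. MTFnn is the frequency where MTF first drops below nn% of its zero-frequency value; MTFnnP uses nn% of the peak value instead, which is why it is less affected by a sharpening overshoot.

```python
import numpy as np


def mtf_nn(freq, mtf, level=0.5, relative_to_peak=False):
    """Frequency where an MTF curve first falls below a threshold.

    relative_to_peak=False: threshold = level (MTFnn; assumes MTF(0) = 1).
    relative_to_peak=True:  threshold = level * peak MTF, searching from the
                            peak toward higher frequencies (MTFnnP).
    Returns NaN if the curve never crosses the threshold.
    """
    freq = np.asarray(freq, dtype=float)
    mtf = np.asarray(mtf, dtype=float)
    start = int(np.argmax(mtf)) if relative_to_peak else 0
    threshold = level * mtf[start] if relative_to_peak else level
    for i in range(start, len(mtf) - 1):
        if mtf[i] >= threshold > mtf[i + 1]:
            # Linear interpolation between the two bracketing samples.
            t = (mtf[i] - threshold) / (mtf[i] - mtf[i + 1])
            return float(freq[i] + t * (freq[i + 1] - freq[i]))
    return float("nan")


# A sharpened-looking curve (made-up numbers): peak 1.2 at 0.1 cycles/pixel.
freq = [0.0, 0.1, 0.2, 0.3, 0.4]
mtf = [1.0, 1.2, 0.9, 0.5, 0.2]
print(mtf_nn(freq, mtf))                         # MTF50
print(mtf_nn(freq, mtf, relative_to_peak=True))  # MTF50P
print(max(mtf))                                  # peak (sharpening overshoot)
```

To express results in LW/PH, as in the table, multiply cycles/pixel by 2 × the picture height in pixels (two line widths per cycle).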


Final comments and observations

All of the cameras discussed in this page make excellent images.

- The 36-40 megapixel cameras (Sony, Pentax, Nikon) are capable of outstanding performance, but you won’t see it unless you:

  - Use the finest lenses: ordinary consumer-grade lenses can rarely take advantage of these very high resolution sensors.

  - Print large: at 13×19 inches (common for home printers) you won’t see a difference.

  - Use good technique: keep the camera steady during exposure and set the lens close to its optimum aperture.

If you can’t meet all of these criteria, you can save a lot of money (and weight and storage) by sticking with a camera with 20 or fewer megapixels. 10-20 megapixel cameras with good optics are capable of making phenomenally fine 13×19 inch (or larger) prints, and they’re smaller, lighter, and less expensive. So why bother unless you want to look like a serious photographer (and maybe strain your neck in the process)?

- I’ve always been suspicious that mirrors compromise sharpness, especially for long lenses, though I haven’t done a controlled study. The lack of a mirror was one of the key advantages of the old Leicas (I still have my M2R), and it’s still an advantage, even though mirrors have improved (my EOS-6D is much smoother than my film SLRs). This makes the Sony A7R very attractive compared to the Nikon D800(E) and Pentax 645D (which is considerably more expensive and heavier). But I won’t run out to buy an A7R because few lenses are available (as of November 2013). They’ll be coming out over the next year, and I expect them to be excellent: I’ll be watching the reviews.

- Smaller mirrorless cameras (APS-C and Micro Four-Thirds from Panasonic, Sony, Olympus, Fuji, and others) are very appealing alternatives if you don’t need to make giant prints.

- Although the Panasonic LX7 is very good, the more expensive Sony RX100 II hits a real sweet spot when you balance its size, weight, and image quality. It’s a bargain for what it does. My son convinced two of his friends to buy them.

- Most of these cameras have fairly strong noise reduction that results in loss of fine texture. (The Pentax, Fuji, and Sigma are the exceptions.) Whether or not this is a good thing is a matter of taste. You can recover the texture by turning down the noise reduction (if an adjustment is available) or by performing raw conversion off the camera using little or no noise reduction.

Sony has planted a bit of lust in my brain. I’ll be watching the growth of the A7R system. One day I may make a very rash, very large purchase (with a lot of thought and analysis behind it).

Slanted-Edge versus Siemens Star: A comparison of sensitivity to signal processing

This post addresses concerns about the sensitivity of slanted-edge patterns to signal processing, especially sharpening, and corrects the misconception that sinusoidal patterns, such as the Siemens star (included in the ISO 12233:2014 standard), are insensitive to sharpening, and hence provide more robust and stable MTF measurements.

To summarize our results, we found that the Siemens Star (and other sinusoidal patterns) is nearly as sensitive as slanted-edges to sharpening, and that slanted-edges give reliable MTF measurements that correspond to the human eye’s perception of sharpness.

The relatively high contrast of the Siemens Star (specified as >50:1 in the ISO standard) makes measurement results somewhat sensitive to clipping and nonlinearities in tonal response. Low contrast slanted-edges (4:1 in the ISO standard) closely approximate features our eyes use to perceive sharpness, and they rarely saturate images. Measurements based on slanted-edges accurately represent real-world performance and are well suited for testing cameras in a wide range of applications, from product development to manufacturing.

Full details of the comparison are in the Slanted-Edge versus Siemens Star and the Slanted-Edge versus Siemens Star, Part 2 pages, where we compare MTF calculation results from slanted-edge patterns, which are performed by Imatest SFR, SFRplus, and eSFR ISO, to results from sinusoidal patterns, which are performed by the Imatest Star chart (for the Siemens star) and Log F-Contrast modules, paying special attention to the effects of signal processing, especially sharpening and noise reduction (which are performed by software or in-camera firmware).

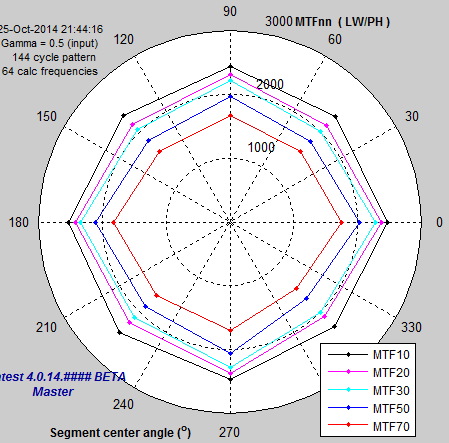

Most consumer cameras sharpen images in the presence of contrasty features (like edges) and reduce noise (lowpass filtering; the opposite of sharpening) in their absence. The details of this processing are more complex, of course, and vary greatly for different cameras. For this reason we tested six cameras. The primary example is a camera whose image sensor and pixel size are between those of camera phones and DSLRs: the Panasonic Lumix LX7 (a high-end point-and-shoot with an excellent Leica zoom lens and optional raw output). We also analyze a camera phone with excessive sharpening and four additional cameras.

Raw results

Quick summary: Raw MTF results are nearly independent of chart type, contrast level, and ISO speed.

Raw images were converted to demosaiced TIFF files using dcraw, which applies no sharpening or noise reduction. Output gamma was set to 1.0 (Linear; i.e., no encoding gamma). No signal processing was applied apart from demosaicing. The converted raw images can be considered to be “original” unprocessed images. In comparing raw results from slanted edges of varying contrast (2:1, 4:1, and 10:1) with images of the Log F-Contrast and Siemens Star charts, we found that raw results are nearly independent of chart type, contrast level, and ISO speed.

Raw image @ ISO 80; >10:1 edge

The monotonically-decreasing shape of the MTF curve (clearer on the less noisy image on the left) is characteristic of unsharpened images. Sharpened images have a characteristic boost in the middle frequencies (around half the Nyquist frequency) described in more detail here. We will use this curve for comparison with sharpened images, below.
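To see why sharpening produces a boost at middle frequencies, consider a simple symmetric unsharp-mask-style filter. The model below is an assumption chosen for illustration, not any camera's actual firmware: each pixel is boosted relative to the average of its neighbors R pixels away, with strength k. Its transfer function rises from 1 at f = 0 to a peak of (1 + k)/(1 - k) at f = 1/(2R), so R = 2 peaks at half the Nyquist frequency and R = 1 peaks at Nyquist. `sharpening_mtf` and `kernel_response` are hypothetical names; the second function cross-checks the closed form against the filter taps.

```python
import numpy as np


def sharpening_mtf(f, k=0.2, radius=2):
    """Frequency response of a simple symmetric sharpening filter (sketch).

    Assumed filter, applied along one axis with integer radius R in pixels:
        y[n] = (x[n] - (k/2) * (x[n - R] + x[n + R])) / (1 - k)
    Its response is (1 - k*cos(2*pi*f*R)) / (1 - k), with f in cycles/pixel.
    It is 1 at f = 0 and peaks at (1 + k)/(1 - k) where f = 1/(2R).
    """
    f = np.asarray(f, dtype=float)
    return (1.0 - k * np.cos(2.0 * np.pi * f * radius)) / (1.0 - k)


def kernel_response(f, k=0.2, radius=2):
    """Cross-check: evaluate the DTFT of the same filter taps directly."""
    taps = np.zeros(2 * radius + 1)
    taps[radius] = 1.0
    taps[0] = taps[-1] = -k / 2.0
    taps /= 1.0 - k
    n = np.arange(-radius, radius + 1)
    f = np.atleast_1d(np.asarray(f, dtype=float))
    return np.real(np.exp(-2j * np.pi * np.outer(f, n)) @ taps)


f = np.linspace(0.0, 0.5, 11)        # 0 to Nyquist in cycles/pixel
print(sharpening_mtf(f, radius=2))   # peak at 0.25 c/p (half Nyquist)
print(sharpening_mtf(f, radius=1))   # peak at 0.5 c/p (Nyquist)
```

Multiplying an unsharpened MTF curve by a response like this one is what turns a monotonically decreasing curve into one with a mid-frequency bump.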

Slanted-edge results

This is typical of consumer cameras, which tend to apply more sharpening near contrasty features and less sharpening in their absence (often none at all, or even noise reduction, i.e., lowpass filtering, which is the inverse of sharpening). The sharpening boost is very clear for slanted-edges (and indeed represents what happens to edges in real-world images). Next we look at sharpening in sinusoidal images (Log F-Contrast and the Siemens Star).

Sinusoidal (Log F-Contrast and Siemens star) results

Both the Log F-Contrast chart and the Siemens Star have sinusoidal patterns, and hence have similar MTF response characteristics (at similar contrast levels). The key differences:

- The Log F-Contrast measures MTF in a single direction (horizontal), but has a range of contrast levels.

- The Siemens Star measures MTF in multiple directions, but has a single (high) contrast level (specified as >50:1 in ISO 12233:2014).
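Contrast ratios (4:1 for the ISO 12233:2014 edges, >50:1 for the Siemens Star) and modulation levels (used to label the Log F-Contrast rows) describe the same quantity in two ways. The sketch below assumes modulation is Michelson contrast computed from linear (not gamma-encoded) intensities, m = (Imax - Imin)/(Imax + Imin); the function names are illustrative.

```python
def contrast_to_modulation(ratio):
    """Michelson modulation m = (Imax - Imin) / (Imax + Imin) for C = Imax/Imin."""
    return (ratio - 1.0) / (ratio + 1.0)


def modulation_to_contrast(m):
    """Inverse relation: C = (1 + m) / (1 - m), valid for 0 <= m < 1."""
    return (1.0 + m) / (1.0 - m)


# ISO 12233:2014 edge, ISO 12233:2000 minimum edge, Siemens Star minimum.
for c in (4.0, 40.0, 50.0):
    print(f"{c:g}:1 contrast -> modulation {contrast_to_modulation(c):.3f}")
```

With this definition a 4:1 edge corresponds to m = 0.6 and a 50:1 pattern to m ≈ 0.96, while m = 0.3 corresponds to about 1.86:1, consistent with the "approximately 2:1" quoted later in this section.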

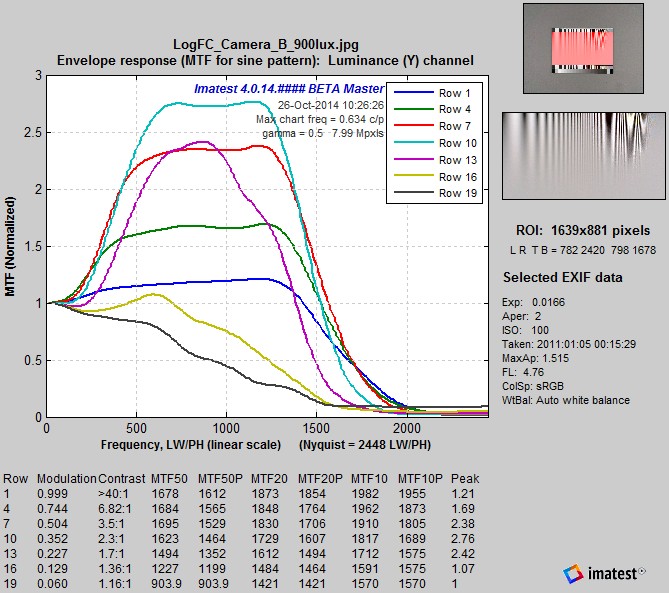

MTF for Log F-Contrast, rows 1-19 in steps of 3 (of 25), Camera JPEG @ ISO 80

MTF contours for Log F-Contrast image, Camera JPEG @ ISO 80

The two plots (above) display the same MTF results for the Log F-Contrast chart in two different formats: actual MTF curves (above left) and MTF contour plots (above right). The contour plots are clearer for observing the change in response with contrast, but the MTF curves are better for comparing with the Siemens Star chart results on the right. Sharpening is present in rows with modulation levels greater than 0.1. It drops very rapidly for modulation below 0.1: it’s mostly gone by Row 19 (modulation = 0.06).

These results show that a significant amount of sharpening is present for high contrast sinusoidal patterns.

A camera phone with extreme sharpening

In early 2013 we received images from a camera phone that had an extreme— and highly atypical— amount of sharpening. We described the results of testing this image in Dead Leaves measurement issue. We did not receive a Siemens star image, but, as we have shown in Slanted-Edge versus Siemens Star, the response of Row 1 of the sinusoidal Log F-Contrast chart should be very close to the response of the (high contrast) Siemens Star. Here are the Log F-Contrast results.

MTF for extremely sharpened image: Log F-Contrast, rows 1-19 in steps of 3 (of 25), Camera JPEG @ ISO 80

MTF contours for extremely sharpened image: Log F-Contrast, Camera JPEG @ ISO 80

For this camera, the MTF of the sinusoidal Log F-Contrast chart (whose response for the high contrast rows near the top should be similar to the Siemens Star pattern) is remarkably sensitive to sharpening— more than expected. The maximum peak response of 2.8 was for modulation = 0.3 (approximately 2:1 contrast). For higher contrast levels (rows 1-10) sharpening causes the Log F-Contrast chart to saturate, i.e., clip (go to pure white). This saturation is visible in the flat tops of the MTF curves (above-left) and in a crop of the image in the Slanted-Edge versus Siemens Star documentation page.

The Siemens Star, which has high contrast (>50:1; comparable to the top row of the Log F-Contrast chart), would have a lower MTF peak and a higher MTF50 for this camera because of strong saturation, not because it is less sensitive to signal processing. Measurements would look unrealistically good for this system, despite the severe (and highly visible) oversharpening.
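The flattening effect of saturation can be illustrated with a toy model. It assumes that, at a single frequency, sharpening simply multiplies a sinusoid's amplitude by a gain (2.8, the peak reported above), and that the result is clipped to the [0, 1] range (pure black to pure white). Real cameras apply contrast-dependent, nonlinear processing, so this is only a qualitative sketch; `apparent_gain` is a hypothetical helper.

```python
import numpy as np


def apparent_gain(modulation, gain, n_periods=8, samples_per_period=64):
    """Apparent MTF of a sharpened, then clipped, sinusoid (toy model).

    At a single frequency a linear sharpening filter only scales the
    sinusoid's amplitude by `gain`. The boosted signal is clipped to [0, 1].
    The result is the amplitude of the fundamental after clipping divided by
    the original amplitude, i.e. what an MTF measurement would report.
    """
    n = n_periods * samples_per_period
    phase = 2.0 * np.pi * n_periods * np.arange(n) / n
    mean = 0.5
    amp_in = mean * modulation              # modulation = amplitude / mean
    boosted = mean + gain * amp_in * np.sin(phase)
    clipped = np.clip(boosted, 0.0, 1.0)
    spectrum = np.fft.rfft(clipped)
    amp_out = 2.0 * np.abs(spectrum[n_periods]) / n   # fundamental amplitude
    return amp_out / amp_in


for m in (0.1, 0.3, 0.6, 0.9):
    print(m, round(apparent_gain(m, gain=2.8), 3))
```

For low modulation the boosted sinusoid stays in range and the reported gain equals the true gain. Once gain × modulation exceeds 1, clipping removes energy from the fundamental and the apparent MTF peak falls, even though the underlying sharpening has not changed.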

Summary

Our perception of image sharpness is closely correlated to the appearance of edges (contrast boundaries) in the image. This is a key reason for the use of edges in MTF measurement (the slant makes the result insensitive to sampling phase). Saturation was often a problem with the edges in the old ISO 12233:2000 chart, which had a specified minimum contrast of 40:1 (80:1 or more was typical). This problem has been resolved in the new ISO 12233:2014 standard (released in February 2014; implemented in SFRplus and ISO 12233:2014 Edge SFR charts), which specifies an edge contrast of 4:1. Not only are these edges unlikely to saturate, their contrast is similar to real-world features that affect our perception of sharpness. Measurements based on low-contrast slanted-edges accurately represent imaging system performance.

Results from the six cameras we tested (two in Slanted-Edge vs. Siemens Star (Part 1) and four in Part 2) show that MTF results for both slanted-edges and Siemens stars (as well as other sinusoidal patterns) are sensitive to sharpening, and that there tends to be more sharpening for higher contrast features, although some cameras may reduce sharpening at the very highest contrast levels. We also found that the Siemens star was more affected by saturation and non-uniform signal processing in high contrast regions. Saturation tends to flatten the Siemens star MTF response, reducing peak MTF and making it appear less sensitive to sharpening, when in fact the MTF measurement is questionable.

The sensitivity of slanted edges to sharpening is not a drawback because it is not excessive and it represents the way we perceive image sharpness.

| | Advantages | Disadvantages |
|---|---|---|
| Slanted-edge | More efficient use of space (allows a detailed map of sharpness over the image surface). Faster. Robust in the presence of optical distortion. | Slightly more sensitive to software sharpening, depending on camera firmware. Measures only near-horizontal and near-vertical edges. |
| Siemens Star | Slightly less sensitive to software sharpening. Measures MTF for a variety of angles (not just near-horizontal and vertical), though these measurements seem to be affected only by demosaicing and sharpening. | Requires more space. Slower. Saturation in highly oversharpened images is not obvious from measurement results. |

Conclusions

The slanted-edge pattern provides accurate and robust measurements. Its speed and efficient use of space (which allows detailed sharpness maps) make it the best choice for measuring the performance of a wide variety of camera systems and applications, from product development to production testing.

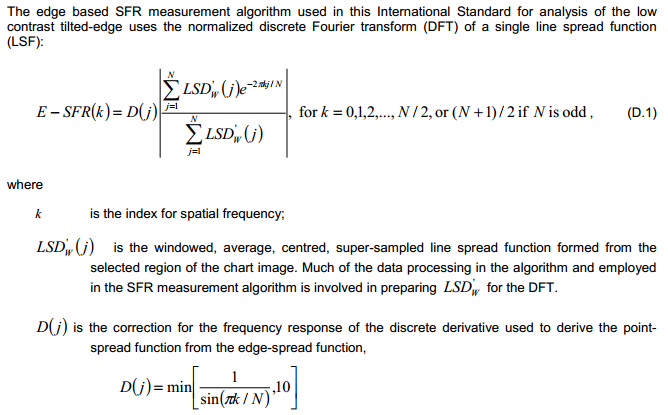

LSF correction factor for slanted-edge MTF measurements

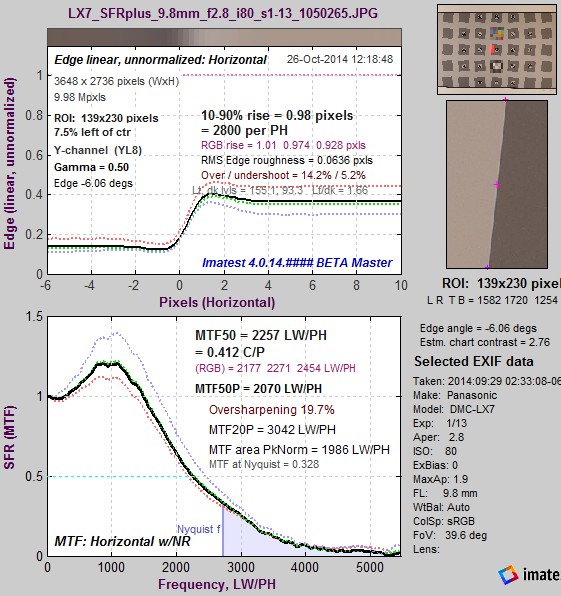

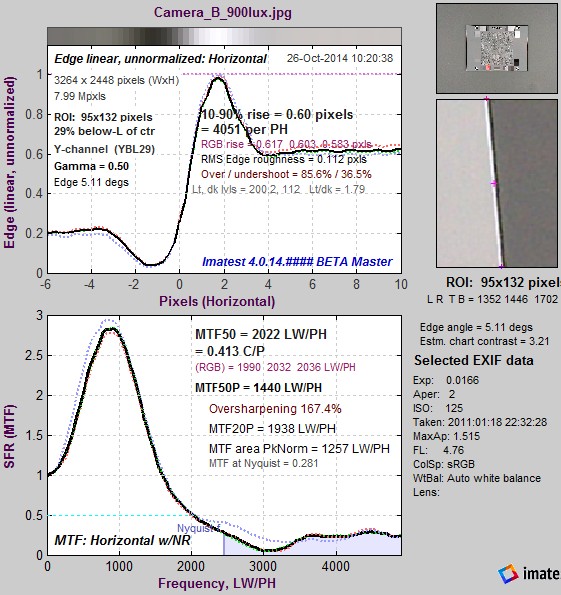

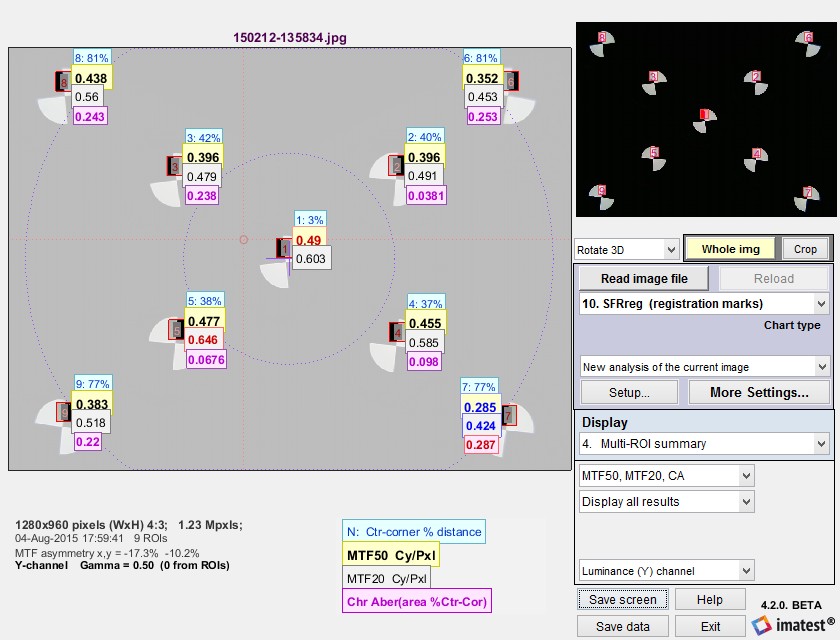

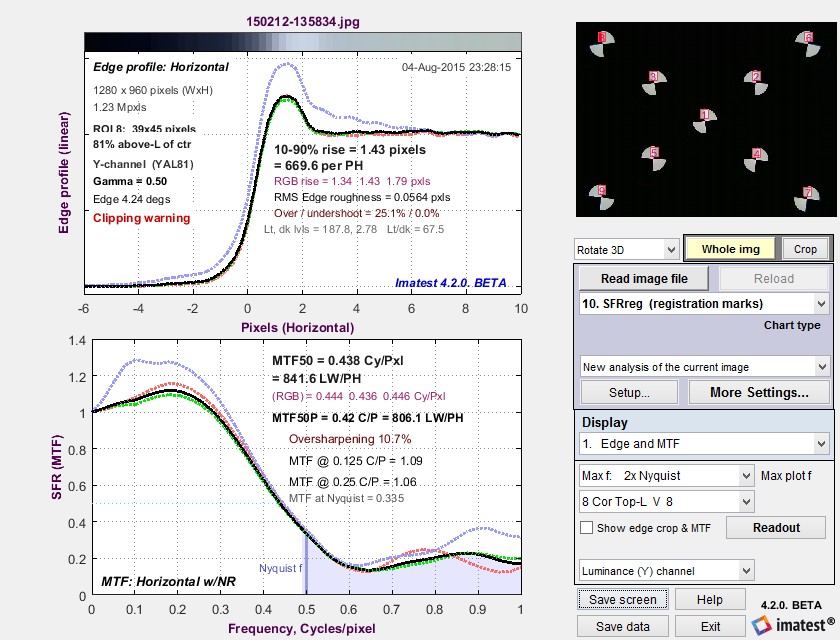

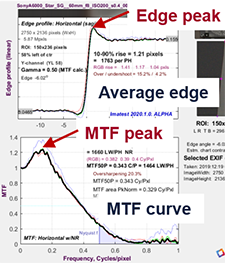

A correction factor for the slanted-edge MTF (Edge SFR; E-SFR) calculations in SFR, SFRplus, eSFR ISO, SFRreg, and Checkerboard was added to Imatest 4.1.2 (March 2015). This correction factor is included in the ISO 12233:2014 standard, but is not in the older ISO 12233:2000 standard. Because it corrects for an MTF loss caused by the numerical calculation of the Line Spread Function (LSF) from the Edge Spread Function (ESF), we call it the LSF correction factor.

The LSF correction factor primarily affects very high spatial frequencies beyond most of the energy for typical high quality cameras. But it does make a difference for practical measurements: MTF50 for a typical high quality camera (shown below) is increased by about 1.5%.

The correction factor was turned off by default in Imatest versions 4.1.n. It is turned on by default in versions 4.2+. It can be set (to override the default) by pressing Settings, Options III in the Imatest main window as shown below, then checking the box for the correction. We strongly recommend turning on the LSF correction factor, i.e., the box should be checked.

The equations

Here is the Edge SFR (MTF) equation from ISO 12233:2000, Annex C:

© ISO 2000 – All rights reserved

And here is the corresponding equation from ISO 12233:2014, Annex D. Note that E-SFR(k) is the symbol for edge-based SFR, not a subtraction:

© ISO 2014 – All rights reserved

The difference between the two equations is the LSF correction factor D(j) for the numerical differentiation used to calculate the Line Spread (not Point Spread*) Function from the Edge Spread Function. Note that D(j) is incorrect in the ISO 12233:2014 standard. It should read,

*If you thought ISO standards were written by gods on Mount Olympus (the Greek version), the many misprints should set you straight.

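Numerical differentiation is a linear process, so it has a transfer function. The ISO 12233:2014 formula (D.8) calculates the Line Spread Function LSF(x) from the 4x-oversampled Edge Spread Function ESF(x) as a central difference multiplied by W(j), where W(j) is a windowing function not relevant to this analysis. Note that the spacing between points in this calculation is 2 (4x-oversampled) samples = 0.5 pixels. Ignoring W(j), this numerical difference formula may be rewritten in terms of position x (in pixels) and point spacing a,

$$\mathrm{LSF}(x) = \frac{\mathrm{ESF}(x + a/2) - \mathrm{ESF}(x - a/2)}{a}.$$

The Fourier transform (FT) for a time (or spatial) shift is given in Wikipedia: $\mathrm{FT}\{g(x - x_0)\} = e^{-i\omega x_0}\,G(\omega)$. For numerical differentiation, the Fourier transform is therefore

$$\mathrm{FT}\{\mathrm{LSF}\} = \frac{e^{i\omega a/2} - e^{-i\omega a/2}}{a}\,\mathrm{FT}\{\mathrm{ESF}\}.$$

Noting that $e^{i\theta} - e^{-i\theta} = 2i\sin\theta$,

$$\mathrm{FT}\{\mathrm{LSF}\} = \frac{2i\,\sin(\omega a/2)}{a}\,\mathrm{FT}\{\mathrm{ESF}\}.$$

For pure differentiation, the Fourier transform is $\mathrm{FT}\{d\,\mathrm{ESF}/dx\} = i\omega\,\mathrm{FT}\{\mathrm{ESF}\}$. The correction factor is therefore

$$D = \frac{i\omega}{2i\,\sin(\omega a/2)/a} = \frac{\omega a/2}{\sin(\omega a/2)}.$$

Since Δx = a = 0.5 pixels for the 4x-oversampled signal, and frequency f = ω/(2π) has units of cycles/pixel,

$$D(f) = \frac{\pi f a}{\sin(\pi f a)} = \frac{\pi f/2}{\sin(\pi f/2)}.$$

At the Nyquist frequency, fNyq = 0.5 cycles/pixel,

$$D(f_{Nyq}) = \frac{\pi/4}{\sin(\pi/4)} = \frac{\pi}{2\sqrt{2}} \approx 1.1107.$$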
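The correction factor can be evaluated numerically. This sketch assumes frequencies in cycles/pixel and the 0.5-pixel difference spacing described above. The `max_gain` cap is a hypothetical safeguard for this example only (D grows without bound as f approaches 1/a = 2 cycles/pixel); it is not a value taken from ISO 12233.

```python
import numpy as np


def lsf_correction(f, a=0.5, max_gain=10.0):
    """LSF correction factor D(f) = (pi*f*a) / sin(pi*f*a) (sketch).

    f is in cycles/pixel; a is the central-difference spacing in pixels
    (0.5 px = 2 samples of a 4x-oversampled ESF). np.sinc(x) is the
    normalized sinc sin(pi*x)/(pi*x), so D(f) = 1/np.sinc(f*a).
    max_gain is a hypothetical cap used where D would blow up.
    """
    s = np.sinc(np.asarray(f, dtype=float) * a)
    with np.errstate(divide="ignore"):
        return np.where(s > 1.0 / max_gain, 1.0 / s, max_gain)


# Nyquist/4, Nyquist/2, Nyquist, and 2x Nyquist in cycles/pixel.
freqs = np.array([0.125, 0.25, 0.5, 1.0])
print(lsf_correction(freqs))
```

To apply the correction, multiply an uncorrected MTF array by `lsf_correction(freqs)` evaluated at the same frequencies.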

Applying the correction factor

To apply (or disable) the LSF correction factor, click Settings (in the Imatest main window), Options III. Check or uncheck the Use LSF… checkbox as appropriate. The checkbox sets derivCorr in the [imatest] section of imatest-v2.ini to 0 (correction off) or 1 (correction on).

Options III window for applying or removing LSF correction factor


For most applications we recommend checking the box, i.e., turning on the correction factor. However, for industrial applications that use fixed pass/fail thresholds, consistency may be more important than accuracy. To prevent changes in yield and/or avoid modifications to the pass/fail specification, the box should remain unchecked and derivCorr = 0 should be added to the [imatest] section of the ini file.


Verification

To observe the effects of the LSF correction factor, we use an idealized edge, tilted 5 degrees, shown on the right. You can click on it to download it for your own testing.

The ideal edge increases uniformly from 0 to 1 over a distance of τ = 1 pixel.

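The MTF of an ideal uniformly-increasing edge of width τ is the magnitude of the Fourier transform (FT) of its derivative, a rectangle of width τ and height 1/τ:

$$\mathrm{MTF}(f) = \left|\frac{\sin(\pi f \tau)}{\pi f \tau}\right|.$$

If τ equals the pixel spacing (τ = 1 pixel), the expected value of MTF at the Nyquist frequency (0.5 cycles/pixel) is

$$\mathrm{MTF}(0.5) = \frac{\sin(\pi/2)}{\pi/2} = \frac{2}{\pi} \approx 0.6366.$$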

Here are the results without and with the LSF correction factor. Note that gamma has been set to 1 because the idealized image is not gamma-encoded. The results with LSF correction are much closer to the expected value of 2/π = 0.6366. The difference is likely due to digital sampling and the numerical binning/oversampling process: the average oversampled edge shown in the figures below is slightly rounded. Also, the edge rotation correction is not applied to edges slanted by less than 8 degrees. Edge rotation is not included in the ISO standard, so it is not applied to edges that fall under the ISO standard algorithm.

Ideal edge, uncorrected MTF. MTF@Nyquist = 0.56.

Ideal edge, LSF-corrected MTF. MTF@Nyquist = 0.624.
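The expected ideal-edge values can be reproduced with a few lines of NumPy. The second quantity rests on a simplifying assumption: that the only error in the uncorrected measurement is the central-difference response sin(πfa)/(πfa) with a = 0.5 pixels, ignoring binning and sampling effects. `ramp_edge_mtf` is an illustrative name.

```python
import numpy as np


def ramp_edge_mtf(f, tau=1.0):
    """MTF of an edge rising linearly from 0 to 1 over tau pixels.

    The derivative (LSF) is a rectangle of width tau and height 1/tau, whose
    Fourier transform magnitude is |sin(pi*f*tau) / (pi*f*tau)|.
    """
    return np.abs(np.sinc(np.asarray(f, dtype=float) * tau))


f_nyq = 0.5
ideal = float(ramp_edge_mtf(f_nyq))    # expected corrected value, 2/pi

# Simplified model (assumption): without correction, the measurement also
# includes the central-difference response sinc(f*a), with a = 0.5 px.
uncorrected_model = ideal * float(np.sinc(f_nyq * 0.5))
print(round(ideal, 4), round(uncorrected_model, 4))
```

Under this model the uncorrected MTF at Nyquist would be 4√2/π² ≈ 0.573, in the same neighborhood as the 0.56 measured above; applying the correction factor restores the expected value of 2/π ≈ 0.637.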

The figure on the right, generated by MTF Compare (a postprocessor to MTF calculation programs for comparing MTF calculations), compares the uncorrected MTF of the ideal edge (blue) with the corrected MTF (burgundy). The black line is the ratio of uncorrected to corrected MTF, i.e., the transfer function 1/D, where D is the correction factor. It has the expected values of 0.9 (1/1.1107) at the Nyquist frequency (f = 0.5 C/P) and 2/π = 0.6366 at 2×Nyquist (1 C/P).

MTF Compare: Uncorrected vs. Corrected

The idealized image has much more energy above the Nyquist frequency than typical high quality images. Here is an example for a high quality camera showing the effects of the LSF correction factor on MTF50— the most commonly-used summary metric.

Typical edge, uncorrected MTF. MTF50 = 0.355 Cycles/Pixel.

Typical edge, LSF-corrected MTF. MTF50 = 0.360 C/P: 1.41% higher.

The difference may not be significant for many applications.

Consistency with older Imatest versions

The new correction factor is available in Imatest 4.1.2+, where it is turned off by default. The correction factor is turned on by default in Imatest 4.2+ releases.

You can override the default, i.e., turn the correction factor on or off, by clicking Settings (in the Imatest main window), Options III, and checking or unchecking the Use LSF… checkbox as appropriate. Once the setting is saved, it will be retained across all future versions unless changed by the user. The default calculation will be used unless you manually change it in the Options III window.

We strongly recommend turning on the LSF correction factor, i.e., the box should be checked.

For industrial testing with pass/fail thresholds set up with the old calculation, we recommend either:

- adjusting your pass/fail specification to account for the measurement change

- continuing to use the old calculation by setting derivCorr = 0 in the [imatest] section of the ini file

For example, for an ideal edge, MTF at Nyquist/4 (0.125 C/P, a common KPI) increases by only 0.64%, and MTF at Nyquist/2 (0.250 C/P) increases by 2.80%. For an actual sharp raw camera-phone image with gamma = 1, MTF at Nyquist/4 increases by 0.81%, and MTF at Nyquist/2 increases by 3.00%.

Measuring Multiburst pattern MTF with Stepchart

Measuring MTF is not a typical application for Stepchart— certainly not its primary function— but it can be useful with multiburst patterns, which are a legacy from analog imaging that occasionally appear in the digital world. The multiburst pattern is not one of Imatest’s preferred methods for measuring MTF: see the MTF Measurement Matrix for a concise list. But sometimes customers need to analyze them. This feature is available starting with Imatest 4.1.3 (March 2015).

Here is a crop of a multiburst pattern, generated by a Tektronix device:

Crop of Multiburst pattern: click on image for full-size pattern that you can download.

Running Stepchart with the multiburst pattern

Open Stepchart, then read the multiburst image. Make a rough region selection for the multiburst pattern— it will be refined as shown below. Then press Yes (not Express mode) to bring up the Stepchart settings window.

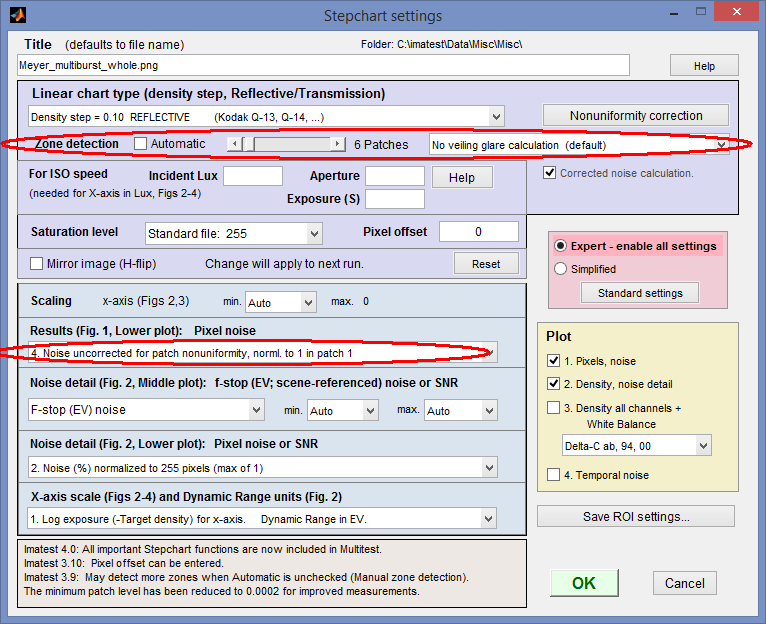

Stepchart settings for Multiburst MTF measurement


The key settings are circled in red. Automatic (Zone detection) should be unchecked, and 6 patches should be selected using the slider (for this pattern; some multiburst patterns have as few as 5 zones). Under Results (Fig. 1, Lower plot), select "4. Noise uncorrected for patch nonuniformity, norml. to 1 in patch 1". Only "Plot 1. Pixels, noise" is relevant to the multiburst image. When settings are complete, click OK. If this is the first run for this size of image, the fine ROI adjustment window will appear.

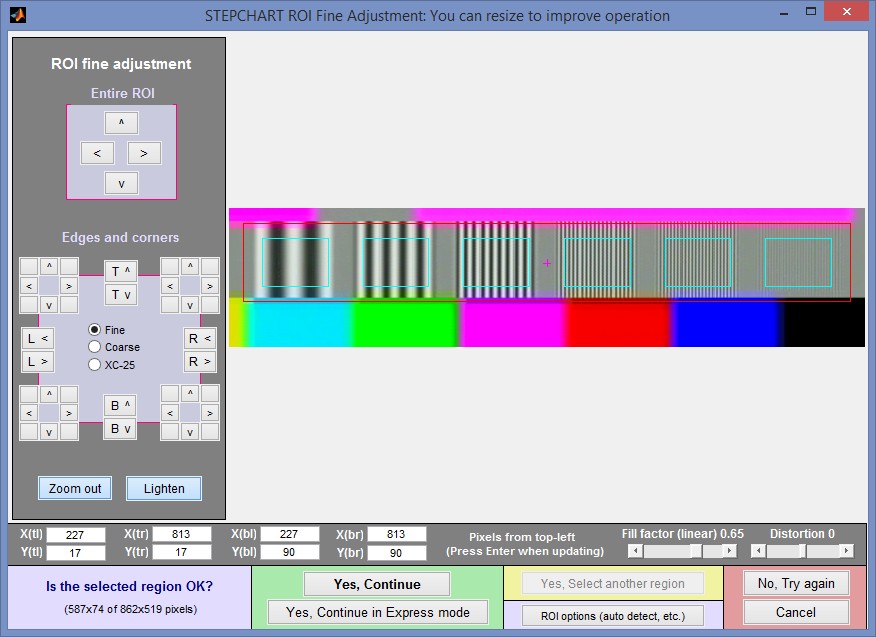

Fine ROI adjustment window for Multiburst pattern in Stepchart. May be enlarged.


Results

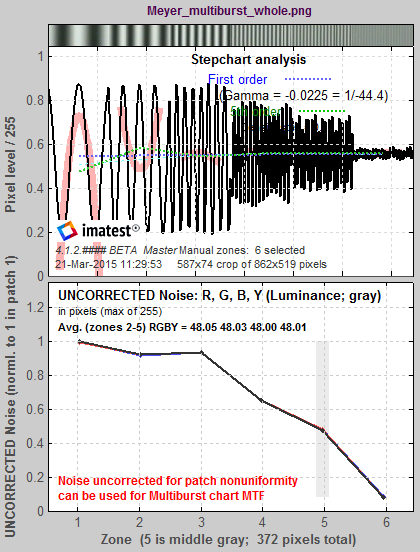

The standard deviation of the pixels in the patch, which is normally used to measure noise, is proportional to the MTF of the pattern in the patch (as long as it’s above the noise). To obtain an accurate result, the patch nonuniformity correction is turned off by setting Results (Fig. 1, Lower plot) to 4. Noise uncorrected…, as indicated above.
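The relationship between patch standard deviation and MTF can be checked with synthetic data. This is a sketch under stated assumptions, not Stepchart's code: each patch is a noise-free sinusoid spanning a whole number of periods, in which case its standard deviation is A/√2 for amplitude A. The amplitudes and frequencies below are made up, and `multiburst_mtf` is a hypothetical name.

```python
import numpy as np


def multiburst_mtf(patches):
    """Relative MTF of multiburst patches from pixel standard deviations.

    For a sinusoid of amplitude A sampled over whole periods, the standard
    deviation is A / sqrt(2), so it is proportional to the pattern's MTF.
    Dividing by patch 1 (the lowest frequency) normalizes it to 1 there.
    Noise is ignored, so results are only meaningful well above the noise.
    """
    stds = np.array([np.std(np.asarray(p, dtype=float)) for p in patches])
    return stds / stds[0]


# Synthetic, noise-free patches (made-up amplitudes and frequencies).
width, height = 240, 20
x = np.arange(width)
cycles = [2, 4, 8, 12, 16, 20]                 # whole periods per patch
amplitudes = np.array([40.0, 38.0, 32.0, 24.0, 14.0, 6.0])
patches = [
    np.tile(128.0 + a * np.sin(2.0 * np.pi * c * x / width), (height, 1))
    for a, c in zip(amplitudes, cycles)
]
print(np.round(multiburst_mtf(patches), 3))
```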

The key results are in the first Stepchart figure, most importantly in the lower plot, which contains the MTF for the patches.

Stepchart Multiburst results: MTF is in lower plot


There are several ways we could enhance this calculation, but we will only do them if there is sufficient interest.

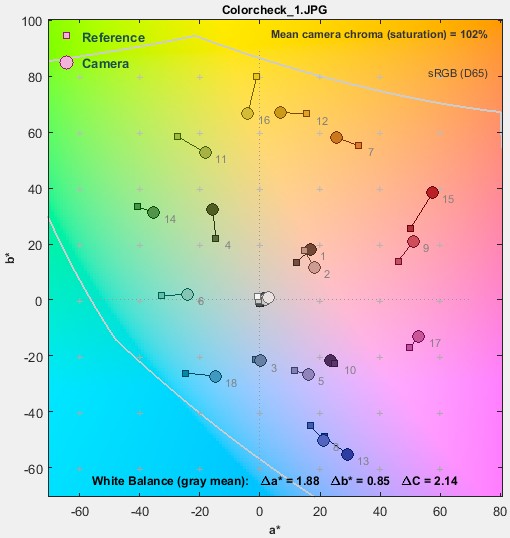

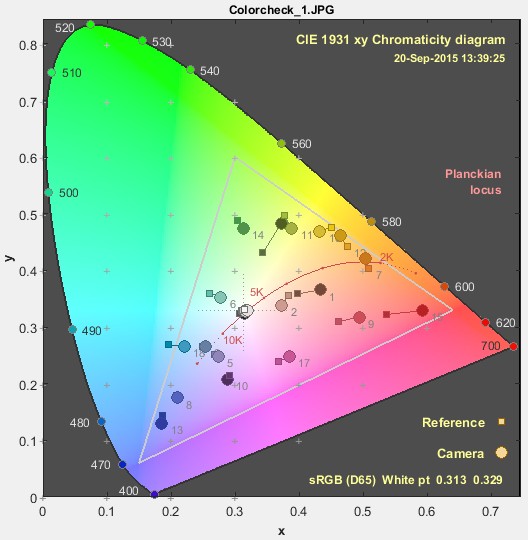

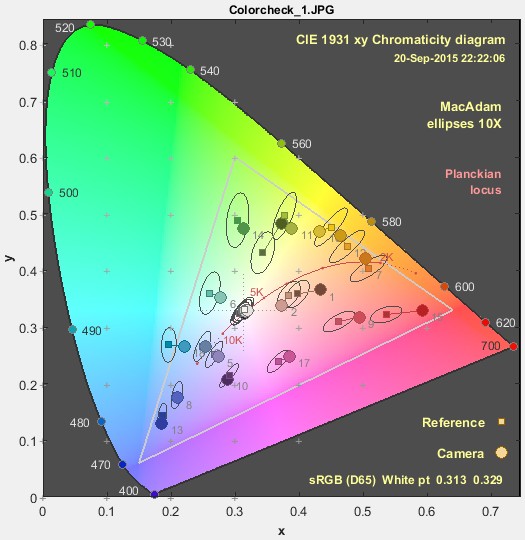

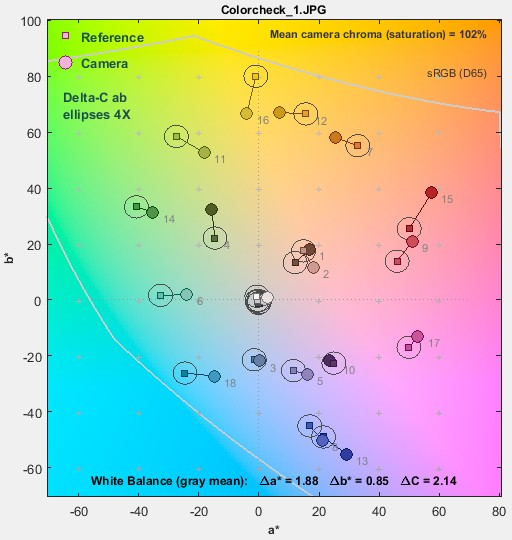

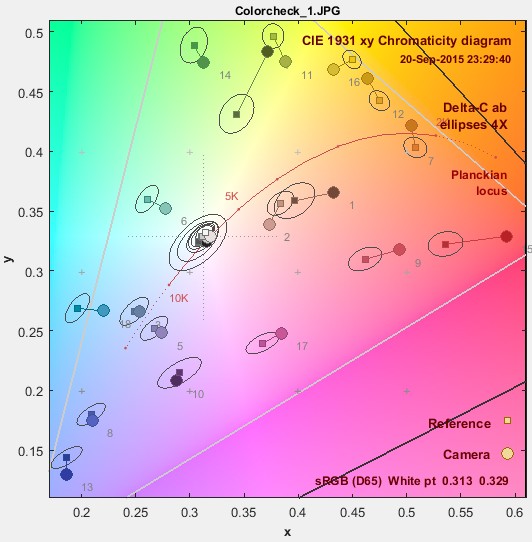

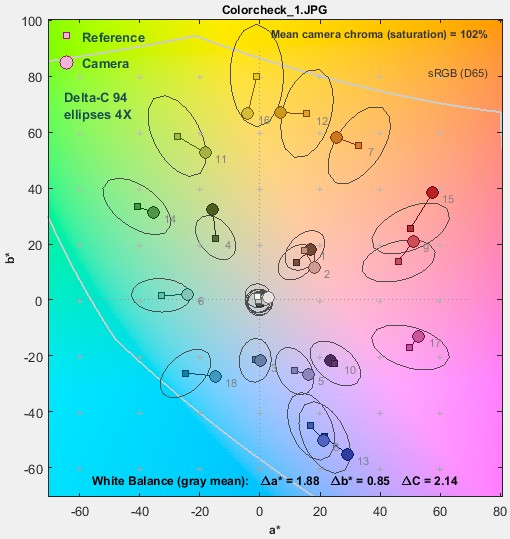

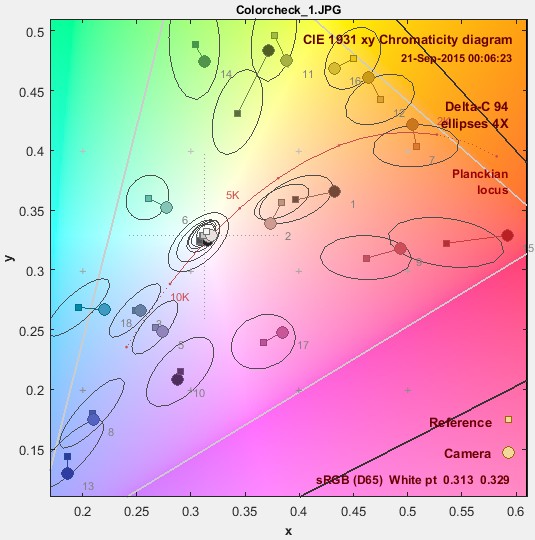

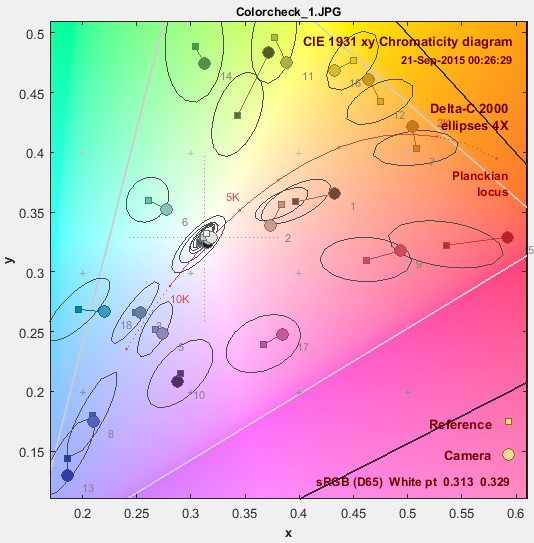

Color difference ellipses

Imatest has several two-dimensional displays for comparing test chart reference (ideal) colors with measured (camera) colors, where reference colors are represented by squares and measured values are represented by circles. The two most familiar representations— CIELAB a*b* and CIE 1931 xy chromaticity— are shown below. They are for the Colorchecker image, also shown below, where the upper-left of each patch is the reference color and the lower-right is the camera color.

How different are the reference and camera colors in the Colorchecker image on the right, represented in these diagrams?

Color differences and MacAdam ellipses

When these representations are viewed, the question naturally arises, “how different are the reference and camera colors?” Color differences can be quantified by several measurements (ΔEab, ΔE94, and ΔE00, where 00 is short for 2000) that include both chroma (color) and luminance (brightness). If brightness (L*) is omitted, these measurements are called ΔCab, ΔC94, and ΔC00, where C stands for chroma (which includes a color’s hue and saturation). In this post we will discuss chroma differences. ΔCab = $\sqrt{(\Delta a^*)^2 + (\Delta b^*)^2}$ (sometimes called ΔC) is the simple Euclidean (geometric) distance on the a*b* plane. It’s familiar but not accurate.

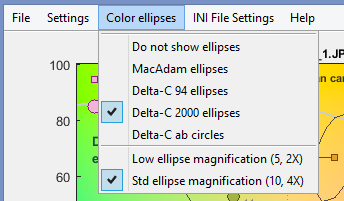

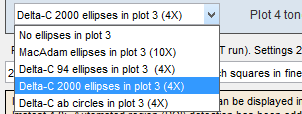

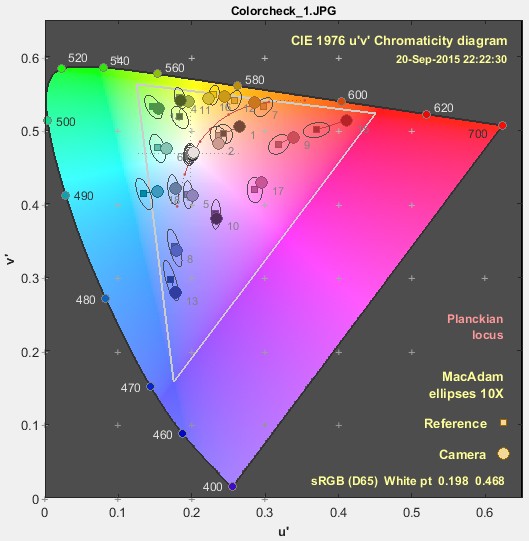

Starting with Imatest 4.2, Imatest’s two-dimensional chroma displays— CIELAB a*b*, CIE 1931 xy chromaticity, CIE u’v’ chromaticity, Vectorscope, and CbCr— can display ellipses that assist in visualizing perceptual color differences.

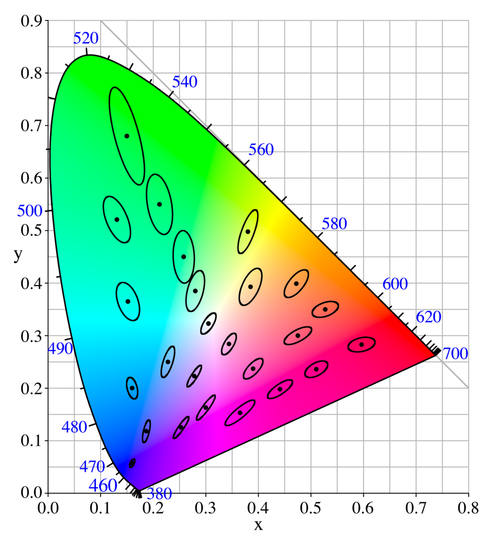

These ellipses were developed from the MacAdam ellipses, shown on the right.

MacAdam ellipses shown in the

CIE 1931chromaticity diagram,

magnified 10X (from Wikipedia)

The MacAdam ellipses were developed from a set of experiments performed at the University of Rochester in 1942, in which an observer tried to match pairs of colors, one fixed and one variable. The ellipse parameters are based on statistical variations in the matching, which are closely related to Just Noticeable Differences (JND). Twenty-five colors (whose xy values are shown as • in the illustration) were used.

Almost all images of MacAdam ellipses show the ellipses for the original 25 colors. In Imatest we use a sophisticated interpolation routine to determine the ellipse parameters for color test charts. This is quite reliable since the gamut of the original color set extends well beyond the gamut of the widely-used sRGB color space (the standard of Windows and the internet), as well as most other color spaces used in imaging. At least 10 of the original colors are outside the sRGB gamut. sRGB is used for all examples in this post.

The ellipses are a visual indicator of the magnitude of perceived color (chroma) difference. The longer the ellipse axis, the greater the distance on the a*b* plane for a given color difference.

Here are the MacAdam ellipses for the X-Rite Colorchecker, in xy (from xyY) and u’v’ (from Lu’v’) representations, displayed in Multicharts. u’v’ is supposed to be more perceptually uniform than xy, but that is not evident inside the sRGB gamut. This may be one reason why u’v’ hasn’t gained much traction in the imaging industry.

xy Colorchecker MacAdam ellipses, magnified 10X
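For reference, u’v’ chromaticity is a projective transformation of xy. The standard CIE 1976 conversion is sketched below; `xy_to_uv_prime` is an illustrative name.

```python
def xy_to_uv_prime(x, y):
    """Convert CIE 1931 (x, y) chromaticity to CIE 1976 (u', v')."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 9.0 * y / d


u, v = xy_to_uv_prime(0.3127, 0.3290)   # D65 white point
print(round(u, 4), round(v, 4))
```

For the D65 white point (x = 0.3127, y = 0.3290) this gives approximately u’ = 0.1978, v’ = 0.4683.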

The MacAdam ellipses are not widely used in imaging (though they seem to have traction in LED lighting). Instead, imaging relies on color differences based on CIELAB (L*a*b*) color space, which was developed in 1976 with the intent of being much more perceptually uniform than xyY. Color differences are usually presented as ΔEab, ΔE94, or ΔE00. In all ΔE (color difference) equations, the Luminance (L*) term can be easily removed, leaving chroma differences ΔC, which can be displayed in two dimensions.

Color difference ellipses in Imatest

All Imatest modules that can produce two-dimensional color difference plots can display color difference ellipses. Colorcheck, SFRplus, and eSFR ISO display them in the a*b* plot. Multicharts and Multitest display them in a*b*, xy, u’v’, Vectorscope, and CbCr plots. In addition to MacAdam ellipses, which are not generally used for color difference measurements (but are interesting for comparison), the following color difference metrics are presented. The illustrations below are for a Colorchecker (shown above) analyzed in Multicharts.

ΔCab (plain ΔC – 1976)

ΔCab is simple geometric distance in the a*b* plane of CIELAB (L*a*b*) color space. When CIELAB was designed, the intent was that ΔC = 1 would correspond to one Just Noticeable Difference (JND). This may hold for colors with very low chroma, $C^*_{ab} = \sqrt{a^{*2} + b^{*2}}$, but it fails badly as chroma increases, which is why ΔE94 and ΔE00 were developed. ΔEab and ΔCab are familiar and often mentioned in imaging literature, but they should never be used for important measurements.

a*b* Colorchecker ΔCab ellipses (circles!), magnified 4X

xy Colorchecker ΔCab ellipses, magnified 4X, zoomed in

ΔC ellipses are always shown magnified 4X (10X would be too large for clear display). We show the xy plot zoomed in so more detail is visible.

ΔC94 (ΔC 1994)

ΔE94 was developed to compensate for the deficiencies of ΔEab. Circles become ellipses with their major axes aligned with the radius from the origin (a* = b* = 0). It is more accurate than ΔEab, especially for strongly chromatic colors.

a*b* Colorchecker ΔC94 ellipses, magnified 4X

xy Colorchecker ΔC94 ellipses, magnified 4X, zoomed in

ΔC00 (ΔC 2000)

ΔE00 was developed as a further refinement over ΔE94. It has an extremely complex equation. Though not perfect, it’s the best of the current color difference formulas, and is recommended when color differences need to be quantified. It’s quite close to ΔE94, except for some blue colors. Until we added these ellipses to Imatest, there was no convenient way to visually compare the different ΔE measurements!
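To show just how much more involved ΔE00 is, below is a plain Python transcription of the published CIEDE2000 equations with parametric factors kL = kC = kH = 1. It is a sketch for experimentation, not Imatest's implementation; the function name is illustrative. For ΔC00 comparisons, the L* values of the two colors can simply be set equal so the lightness term vanishes.

```python
import math


def delta_e00(lab1, lab2, k_l=1.0, k_c=1.0, k_h=1.0):
    """CIEDE2000 color difference between two CIELAB colors (L*, a*, b*)."""
    l1, a1, b1 = lab1
    l2, a2, b2 = lab2

    # Rescale a* (factor G) to improve behavior near the neutral axis.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    c_bar7 = c_bar ** 7
    g = 0.5 * (1.0 - math.sqrt(c_bar7 / (c_bar7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0

    # Lightness, chroma, and hue differences.
    d_lp = l2 - l1
    d_cp = c2p - c1p
    if c1p * c2p == 0.0:
        d_hp = 0.0
    else:
        d_hp = h2p - h1p
        if d_hp > 180.0:
            d_hp -= 360.0
        elif d_hp < -180.0:
            d_hp += 360.0
    d_big_hp = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp / 2.0))

    # Mean values; the mean hue must respect the 0/360 degree wraparound.
    l_bar = (l1 + l2) / 2.0
    c_bar_p = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0

    # Weighting functions and the blue-region rotation term R_T.
    t = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    c_bar_p7 = c_bar_p ** 7
    r_c = 2.0 * math.sqrt(c_bar_p7 / (c_bar_p7 + 25.0 ** 7))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c

    dl = d_lp / (k_l * s_l)
    dc = d_cp / (k_c * s_c)
    dh = d_big_hp / (k_h * s_h)
    return math.sqrt(dl * dl + dc * dc + dh * dh + r_t * dc * dh)
```

The rotation term R_T is what makes ΔE00 diverge from ΔE94 for some blues (mean hue near 275°). Checking any implementation against the published CIEDE2000 test data is strongly advised, since slips in the hue-averaging branches are easy to make and hard to spot.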

a*b* Colorchecker ΔC00 ellipses, magnified 4X

xy Colorchecker ΔC00 ellipses, magnified 4X, zoomed in

Displaying the ellipses in Imatest

In Imatest 4.2 the ellipses are not displayed by default. We may change this in a later version. The table below shows how to control ellipse display.

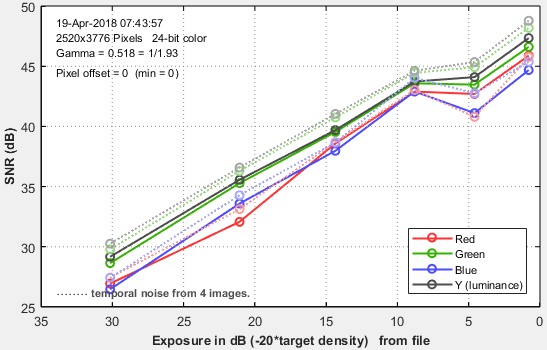

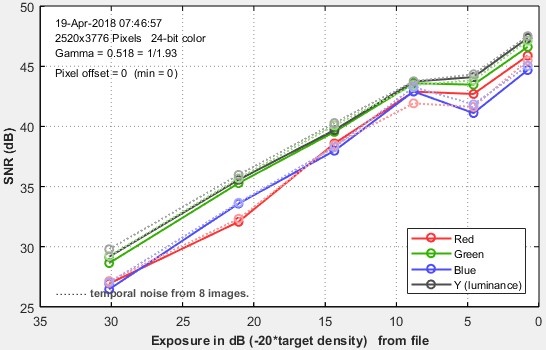

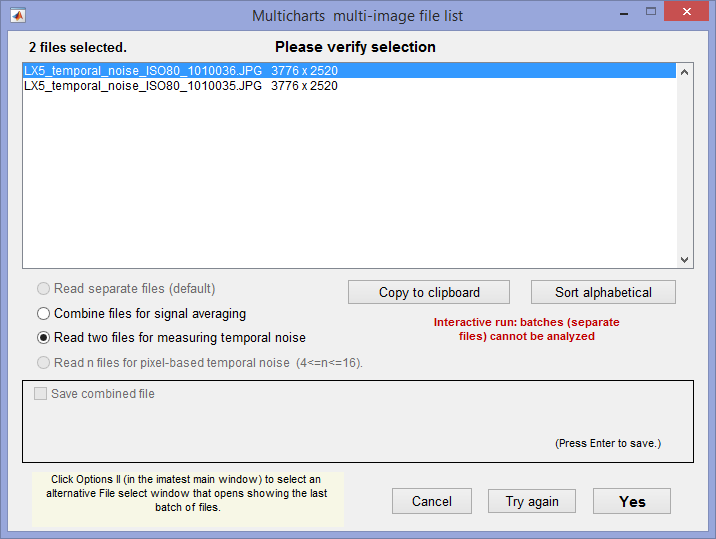

Measuring temporal noise

Temporal noise is random noise that varies independently from image to image, in contrast to fixed-pattern noise, which remains consistent (but may be difficult to measure because it is usually much weaker than temporal noise). It can be analyzed by Colorcheck and Stepchart and was added to Multicharts and Multitest in Imatest 5.1.

It can be calculated by two methods.

- (1) the difference between two identical test chart images (the Imatest-recommended method), and

- (2) the ISO 15739-based method, where it is calculated from the pixel difference between the average of N identical images (N ≥ 8) and each individual image.

In this post we compare the two methods and show why method 1 is preferred.

(1) Two file difference method. In any of the modules, read two images. The window shown on the right appears. Select the Read two files for measuring temporal noise radio button.

The two files are read and their difference, which cancels fixed-pattern noise, is taken. Since the temporal noise in the two images is independent, the noise powers (variances) add. For independent images \(I_1\) and \(I_2\), temporal noise is

\(\displaystyle \sigma_{temporal} = \frac{\sigma(I_1 - I_2)}{\sqrt{2}}\)
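The two-file calculation is short enough to sketch directly. This minimal NumPy version assumes `img1` and `img2` are already cropped to the same uniform patch (cropping is not shown) and encoded identically; the function name is hypothetical, not an Imatest API.

```python
import numpy as np


def temporal_noise_two_files(img1, img2):
    """Temporal noise from two nominally identical captures of one patch.

    Fixed-pattern noise is identical in both frames and cancels in the
    difference; the independent temporal components add in power, so the
    standard deviation of the difference is divided by sqrt(2).
    """
    diff = np.asarray(img1, dtype=np.float64) - np.asarray(img2, dtype=np.float64)
    return np.std(diff) / np.sqrt(2.0)


# Simulated pair: shared fixed pattern (sigma 5) plus independent temporal noise (sigma 2).
rng = np.random.default_rng(0)
fixed_pattern = rng.normal(0.0, 5.0, (512, 512))
frame_a = 100.0 + fixed_pattern + rng.normal(0.0, 2.0, (512, 512))
frame_b = 100.0 + fixed_pattern + rng.normal(0.0, 2.0, (512, 512))
print(np.std(frame_a), temporal_noise_two_files(frame_a, frame_b))
```

In this simulation the raw standard deviation of either frame is about 5.4 (the square root of 5² + 2²), while the two-file estimate comes out close to 2, because the fixed pattern cancels in the difference.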

In Multicharts and Multitest temporal noise is displayed as dotted lines in Noise analysis plots 1-3 (simple noise, S/N, and SNR (dB)).

(2) Multiple file method. From ISO 15739, sections 6.2.4, 6.2.5, and Appendix A.1.4. Available in Multicharts and Multitest. Currently we are using simple noise (not yet scene-referred noise). Select between 4 and 16 files. In the multi-image file list window (shown above) select Read n files for temporal noise. Temporal noise is calculated for each pixel j using

\(\displaystyle \sigma_{diff}(j) = \sqrt{ \frac{1}{N} \sum_{i=1}^N (X_{j,i} - X_{AVG,j})^2} = \sqrt{ \frac{1}{N} \sum_{i=1}^N X_{j,i}^2 - \left(\frac{1}{N} \sum_{i=1}^N X_{j,i}\right)^2 } \)

The latter expression is used in the actual calculation since only two arrays, \(\sum X_{j,i} \text{ and } \sum X_{j,i}^2 \), need to be saved. Since N is relatively small (between 4 and 16, with 8 recommended), \(\sigma_{diff}\) must be corrected using the formula for sample standard deviation (see Identities and mathematical properties in the Wikipedia standard deviation page, as well as Equation (13) of ISO 15739): \(s(X) = \sqrt{\frac{N}{N-1}} \sqrt{E[(X - E(X))^2]}\).

\(\sigma_{temporal} = \sigma_{diff} \sqrt{\frac{N}{N-1}} \)
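Here is a minimal sketch of method (2) using the running-sum approach described above: only ΣX and ΣX² are accumulated, so frames can be streamed one at a time. It returns the per-pixel \(\sigma_{temporal}\); how per-pixel values are combined into a single number for a patch is not shown and is left as an assumption. The function name is hypothetical.

```python
import numpy as np


def temporal_noise_n_files(frames):
    """Per-pixel temporal noise from N >= 2 nominally identical frames.

    Only two running arrays are kept: the sum of X and the sum of X^2.
    """
    sum_x = None
    sum_x2 = None
    n = 0
    for frame in frames:
        x = np.asarray(frame, dtype=np.float64)
        if sum_x is None:
            sum_x = np.zeros_like(x)
            sum_x2 = np.zeros_like(x)
        sum_x += x
        sum_x2 += x * x
        n += 1
    if n < 2:
        raise ValueError("need at least two frames")
    mean = sum_x / n
    # sigma_diff(j) via E[X^2] - E[X]^2; clip small negatives from rounding.
    sigma_diff = np.sqrt(np.maximum(sum_x2 / n - mean * mean, 0.0))
    # Small-sample correction: sigma_temporal = sigma_diff * sqrt(N / (N - 1)).
    return sigma_diff * np.sqrt(n / (n - 1))
```

The clip to zero guards against tiny negative variances that the \(E[X^2] - E[X]^2\) form can produce through floating-point cancellation. With the correction factor applied, the result equals NumPy's per-pixel sample standard deviation, `np.std(frames, axis=0, ddof=1)`.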

We currently recommend the difference method (1) because our experience so far has shown no advantage to method (2), which requires many more images (N ≥ 8 recommended), though method (2) does allow fixed-pattern noise to be calculated at the same time.

To calculate temporal noise with either method, read the appropriate number of files (2 or 4-16) then push the appropriate radio button on the multi-image settings box.

Multi-image settings window, showing setting for method 1.

If 4-16 images are entered, the setting for method 2 (Read n files…) will be available.

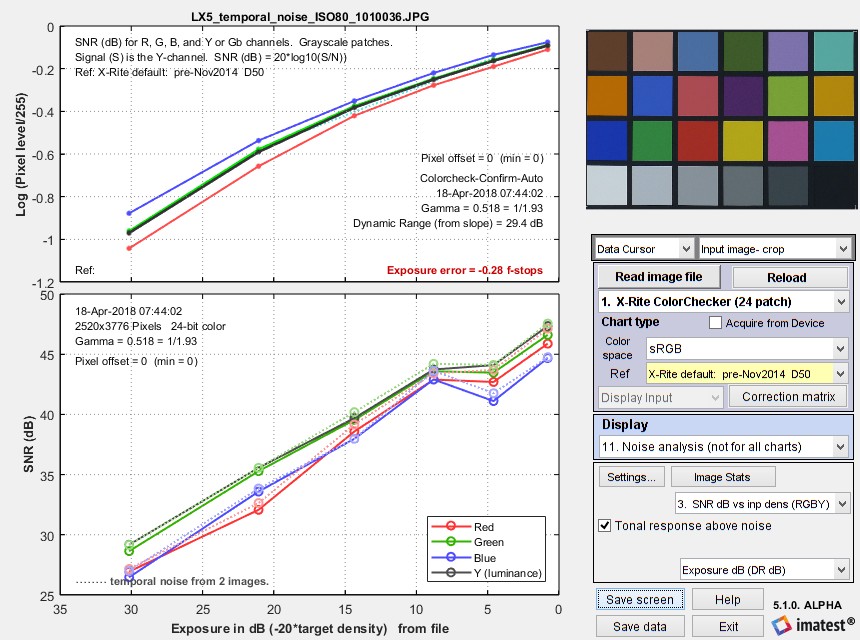

Results for the two methods

The two methods were compared using identical Colorchecker images taken on a Panasonic Lumix LX5 camera (a moderately high quality small-sensor camera now several years old).

Difference method (1) (two files)

Here are the Multicharts results for 2 files.

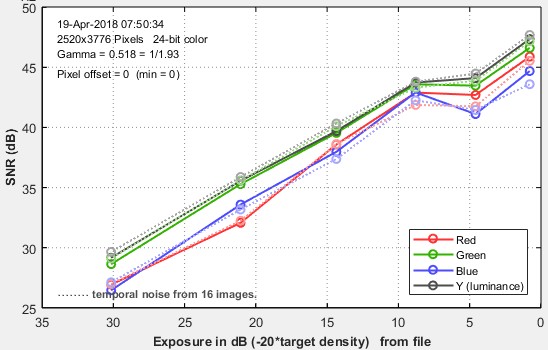

Multicharts SNR results for temporal noise, shown as thin dotted lines in the lower plot

Multi-file method (2) (4-16 files)

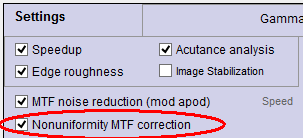

Correcting nonuniformity in slanted-edge MTF measurements

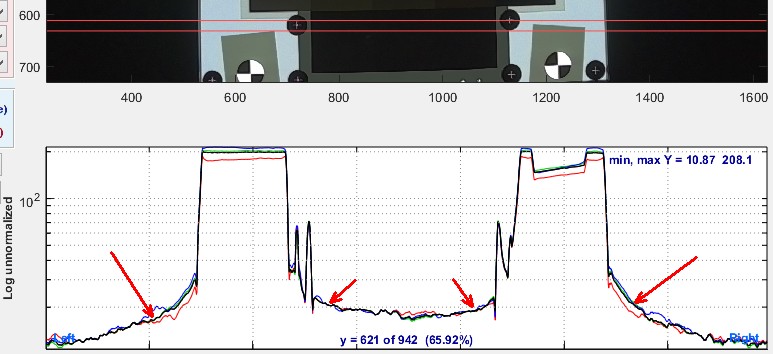

Slanted-edge regions often have nonuniformity across them. This can be caused by uneven illumination, lens falloff, or photoresponse nonuniformity (PRNU) of the sensor.

Uncorrected nonuniformity in a slanted-edge region of interest can lead to an irregularity in MTF at low spatial frequencies. This disrupts the low-frequency reference, which is used to normalize the MTF curve. If the direction of the nonuniformity goes against the slanted edge transition from light to dark, MTF increases. If the nonuniformity goes in the same direction as the transition from light to dark, MTF decreases.

To demonstrate this effect, we start with a simulated uniform slanted edge with some blur applied.

Then we apply a simulated nonuniformity to the edge at different angles relative to the edge. This is modeled to match a severe case of nonuniformity reported by one of our customers:

Here is the MTF obtained from the nonuniform slanted edges:

If the nonuniformity includes an angular component that is parallel to the edge, this adds a sawtooth pattern to the spatial domain, which manifests as high-frequency spikes in the frequency domain. This is caused by the binning algorithm which projects brighter or darker parts of the ROI into alternating bins.

Compensating for the effects of nonuniformity

Although every effort should be made to achieve even illumination, it’s not always possible (for example, in medical endoscopes and wide-FoV lenses).

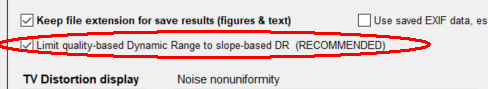

Imatest 4.5+ has an option for dealing with this problem for all slanted-edge modules (SFR and Rescharts/fixed modules SFRplus, eSFR ISO, SFRreg, and Checkerboard). It is applied by checking the “Nonuniformity MTF correction” checkbox in the settings (or “More” settings) window, shown on the right.

When this box is checked, a portion of the spatial curve on the light side of the transition (displayed on the right in Imatest) is used to estimate the nonuniformity. The light side is chosen because it has a much better Signal-to-Noise Ratio than the dark side. In the above image, this would be the portion of the edge profile more than about 6 pixels from the center. Imatest finds the first-order fit to the curve in this region, limits the fit so it doesn’t drop below zero, then divides the average edge by the first-order fit.

The applied compensation flattens the response across the edge function and significantly improves the stability of the MTF:

Summary

In this example, Imatest’s nonuniformity correction reduces the change in MTF from a range of -26.0% to +22.8% down to a range of -3.5% to +4.7%. This is an 83% reduction in the effect of the worst cases of nonuniformity.
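One way to read the 83% figure is as the reduction in the total spread of the MTF change (largest increase minus largest decrease); that reading reproduces it:

\(\displaystyle 1 - \frac{4.7 - (-3.5)}{22.8 - (-26.0)} = 1 - \frac{8.2}{48.8} \approx 1 - 0.168 = 0.832 \approx 83\%\)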

MTF50 versus nonuniformity angle without [blue] and with [orange] nonuniformity correction

While this is a large improvement, the residual effects of nonuniformity are still undesirable. We therefore recommend either enabling your ISP’s nonuniformity correction before performing edge-SFR tests, or averaging the MTF obtained from nearby slanted edges whose light-to-dark transitions run in opposite directions relative to the nonuniformity, to further reduce its effect on your MTF measurements.

Detailed algorithm

We assume that the illumination of the chart in the Region of Interest (ROI) approximates a first-order function, \(L(d) = k_1 + k_2 d\), where \(d\) is the horizontal or vertical distance nearly perpendicular to the (slanted) edge. The procedure consists of estimating \(k_1\) and \(k_2\), then dividing the linearized average edge by \(L(d)\).

\(k_1\) and \(k_2\) are estimated using the light side of the transition, starting at a position \(d_N\) far enough beyond the transition center \(x_{center}\) that the transition itself has little effect on the estimate. To find \(d_N\) we first find the 20% width \(d_{20}\) of the line spread function (LSF; the derivative of the edge), i.e., the distance between the points where the LSF falls to 20% of its maximum value.

\(\displaystyle d_N = x_{center} + 2 d_{20}\)

If the edge response for \(x > d_N\) has a sufficient number of points, it is used to calculate \(k_1\) and \(k_2\) using standard polynomial fitting techniques. The result is a more accurate representation of the edge with the effects of nonuniformity reduced.
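The procedure above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not Imatest's code: the averaged, linearized edge is given as samples `esf` at increasing positions `x` with the light side on the right; the LSF is taken as `np.gradient` of the edge; \(x_{center}\) is the LSF peak; \(d_{20}\) is measured between the outermost samples at or above 20% of the peak; and `min_points` (10 here) is a hypothetical stand-in for "a sufficient number of points". The fit is floored at a tiny positive value so the division never reaches zero.

```python
import numpy as np


def correct_edge_nonuniformity(x, esf, min_points=10):
    """Divide an averaged edge profile by a first-order illumination fit.

    x   : sample positions (pixels), increasing, dark side on the left
    esf : linearized averaged edge profile, light side on the right
    Returns (corrected_esf, k1, k2, d_n). If too few points lie beyond d_n,
    esf is returned unchanged with k1 = k2 = None.
    """
    x = np.asarray(x, dtype=np.float64)
    esf = np.asarray(esf, dtype=np.float64)

    # LSF is the derivative of the edge; its peak marks the transition center.
    lsf = np.gradient(esf, x)
    peak = np.argmax(lsf)
    x_center = x[peak]

    # 20% width: span between the outermost samples where LSF >= 20% of max.
    above = np.nonzero(lsf >= 0.2 * lsf[peak])[0]
    d20 = x[above[-1]] - x[above[0]]

    # Light-side region used for the fit starts at d_N = x_center + 2*d20.
    d_n = x_center + 2.0 * d20
    mask = x > d_n
    if np.count_nonzero(mask) < min_points:
        return esf, None, None, d_n

    # First-order fit L(d) = k1 + k2*d; polyfit returns the highest power first.
    k2, k1 = np.polyfit(x[mask], esf[mask], 1)
    illum = k1 + k2 * x
    # Keep the fit from dropping to or below zero before dividing.
    illum = np.maximum(illum, np.finfo(np.float64).eps)
    return esf / illum, k1, k2, d_n


# Noise-free logistic edge (10% to 100%) multiplied by the ramp 1 - 0.005*x.
x = np.arange(-30.0, 30.25, 0.25)
esf = (1.0 - 0.005 * x) * (0.1 + 0.9 / (1.0 + np.exp(-x)))
corrected, k1, k2, d_n = correct_edge_nonuniformity(x, esf)
```

In this synthetic case the fit recovers \(k_1 \approx 1\) and \(k_2 \approx -0.005\), and the light side of the corrected edge is flat. With real, noisy edges the 20% width would need a more robust search (for example, walking outward from the peak until the LSF first drops below the threshold).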

Future work

- Consider the 2D nonuniformity across the ROI before sampling the 1D average edge

- Use an image of a flat-field to perform nonuniformity correction within Imatest

- Consider the impact of noise, which was not included in this study

- Incorporate enhancements to the slanted-edge algorithms into future revisions of ISO 12233

For any questions on how to do this, or how we can help you with your projects, contact us at support@imatest.com.

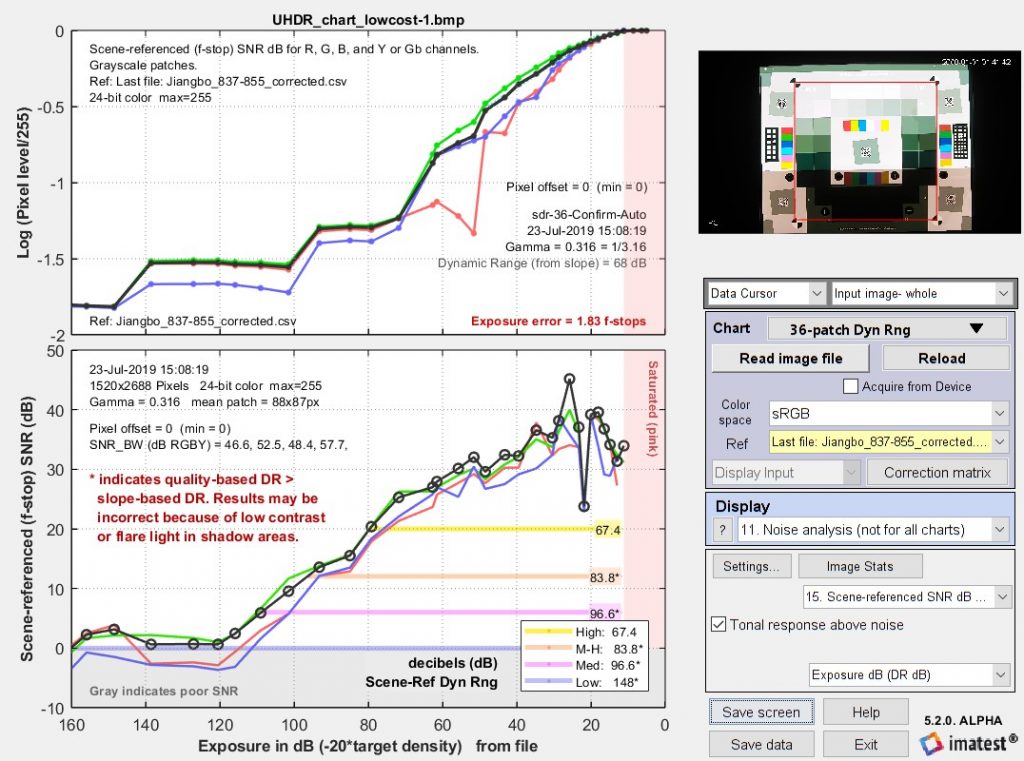

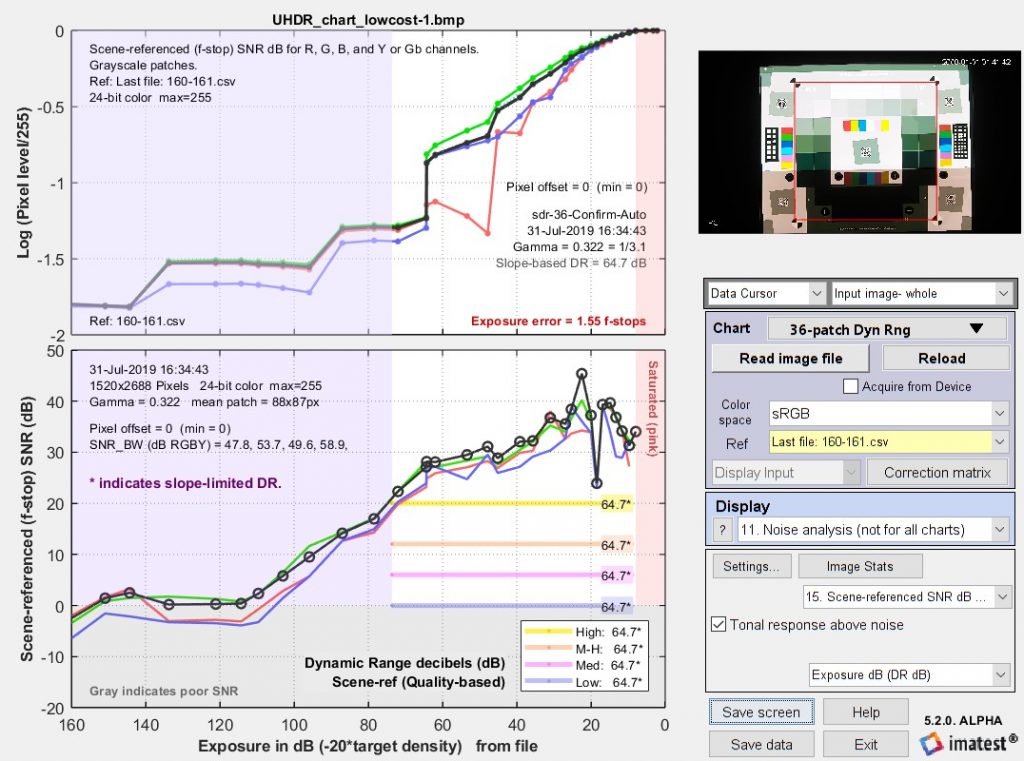

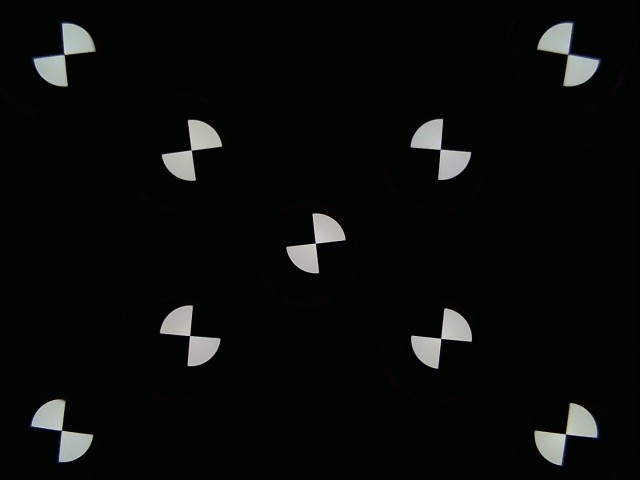

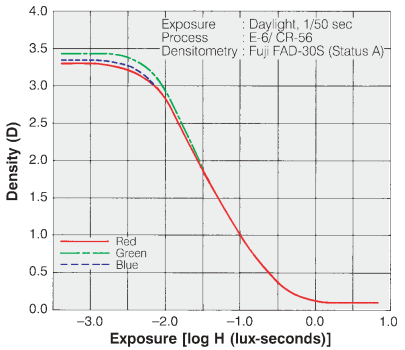

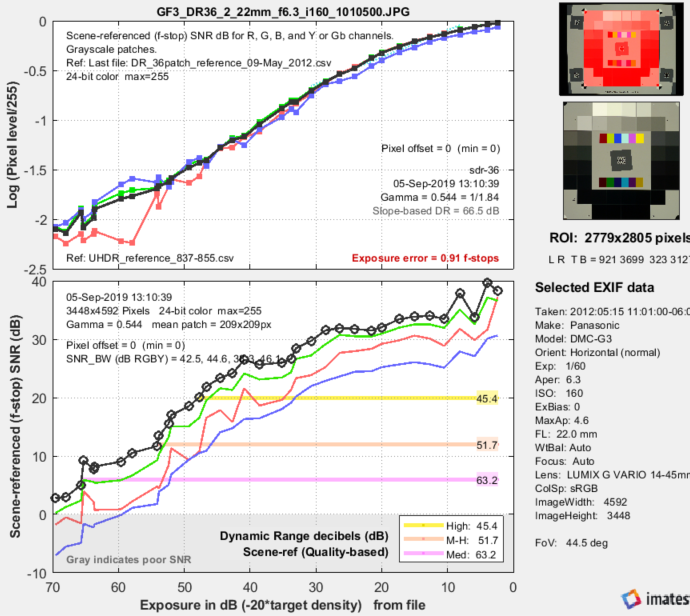

Making Dynamic Range measurements robust against flare light

Introduction

A camera’s Dynamic Range (DR) is the range of tones in a scene that can be reproduced with adequate contrast and good Signal-to-Noise Ratio (SNR). Camera Dynamic Range is often limited by flare light, which is stray light in the image, primarily caused by reflections between lens elements. Flare light reduces DR by fogging images, i.e., washing out detail in dark areas. It is the primary reason that the Dynamic Range of cameras (which include lenses) is poorer than that of image sensors, which can be up to 150dB (30 million:1) for recent HDR (High Dynamic Range) sensors.
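Dynamic Range in decibels uses the 20 log10 convention on the ratio between the brightest and darkest usable levels, so 150dB works out to roughly 31.6 million:1 and 120dB to exactly 1,000,000:1. A minimal pair of helpers (names are illustrative):

```python
import math


def ratio_to_db(ratio):
    """Dynamic range in dB for a brightest-to-darkest ratio (20*log10 convention)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20.0 * math.log10(ratio)


def db_to_ratio(db):
    """Inverse of ratio_to_db: the brightest-to-darkest ratio for a dB figure."""
    return 10.0 ** (db / 20.0)


print(f"150 dB -> {db_to_ratio(150):,.0f}:1")  # roughly 31.6 million:1
print(f"120 dB -> {db_to_ratio(120):,.0f}:1")
```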

In the past two years we’ve learned that flare light in test chart images could, in theory, be mistaken for the image of the test chart, resulting in exaggerated Dynamic Range measurements. The situation became real in mid-2019, when we started seeing actual images where this happened. By studying these images we have developed techniques to guard against exaggerated SNR measurements caused by flare light. We describe these techniques here.

We recognize that some customers may actually prefer exaggerated measurements because they yield very high Dynamic Range numbers (sometimes over 120dB, approaching the specifications of HDR sensors) that look good in marketing materials. The reduced (but realistic) DR measurements obtained when the effects of flare light are removed may make some of these customers uncomfortable. We will do our best to deal with their objections.

The effects of flare light

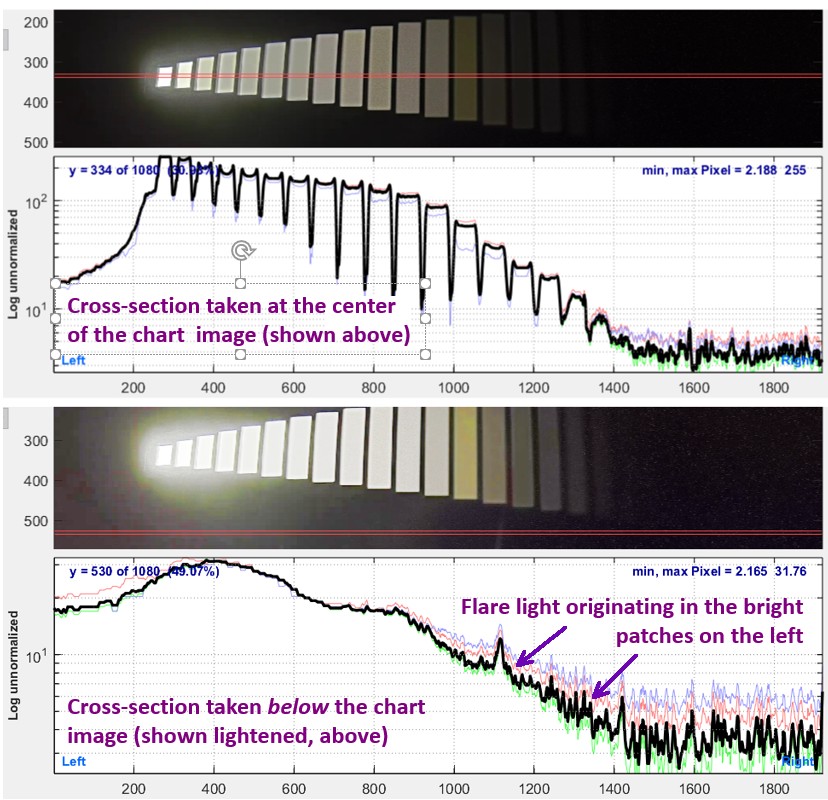

Flare light can be illustrated with an image of the XYLA chart, a precision HDR test chart with a linear configuration, consisting of 21 grayscale patches with Optical Density steps of 0.3. The image is from a low-cost “black box” camera.
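Because optical density is the base-10 logarithm of attenuation, the chart's nominal range follows directly from these numbers. This sketch assumes nominal densities, with the first patch taken as OD 0 relative to the brightest patch (real charts are measured, and their values differ slightly); the function name is illustrative. Each 0.3 OD step is then 20 × 0.3 = 6 dB, and the 20 steps span 6.0 OD, i.e. 10^6:1 or 120dB.

```python
import math


def xyla_nominal_levels(n_patches=21, od_step=0.3):
    """Nominal levels of a stepped-density transmission chart.

    Assumes patch 0 is the brightest (relative OD 0) and each following patch
    adds od_step of optical density. Returns (relative_od, transmission,
    level_db) tuples, where level_db = 20*log10(transmission) relative to
    the brightest patch.
    """
    levels = []
    for i in range(n_patches):
        od = i * od_step
        transmission = 10.0 ** (-od)
        levels.append((od, transmission, 20.0 * math.log10(transmission)))
    return levels


levels = xyla_nominal_levels()
total_range_db = levels[0][2] - levels[-1][2]
print(f"{levels[-1][0]:.1f} OD total, {total_range_db:.1f} dB")
```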

The upper cross-section plot, made with the Image Statistics module, is taken at the center of the XYLA image. Flare is most obvious in the image and as a decay in the cross-section plot to the left of the brightest patch.

XYLA image (from the same image; the lower is lightened) and corresponding cross-section plots

The lower cross-section was taken outside (below) the active chart image, which is shown lightened above to make flare light more visible. The variation in pixel level on the right side of the chart (x between 800 and 1600) is caused by flare light diffusing from the brightest patches on the left.

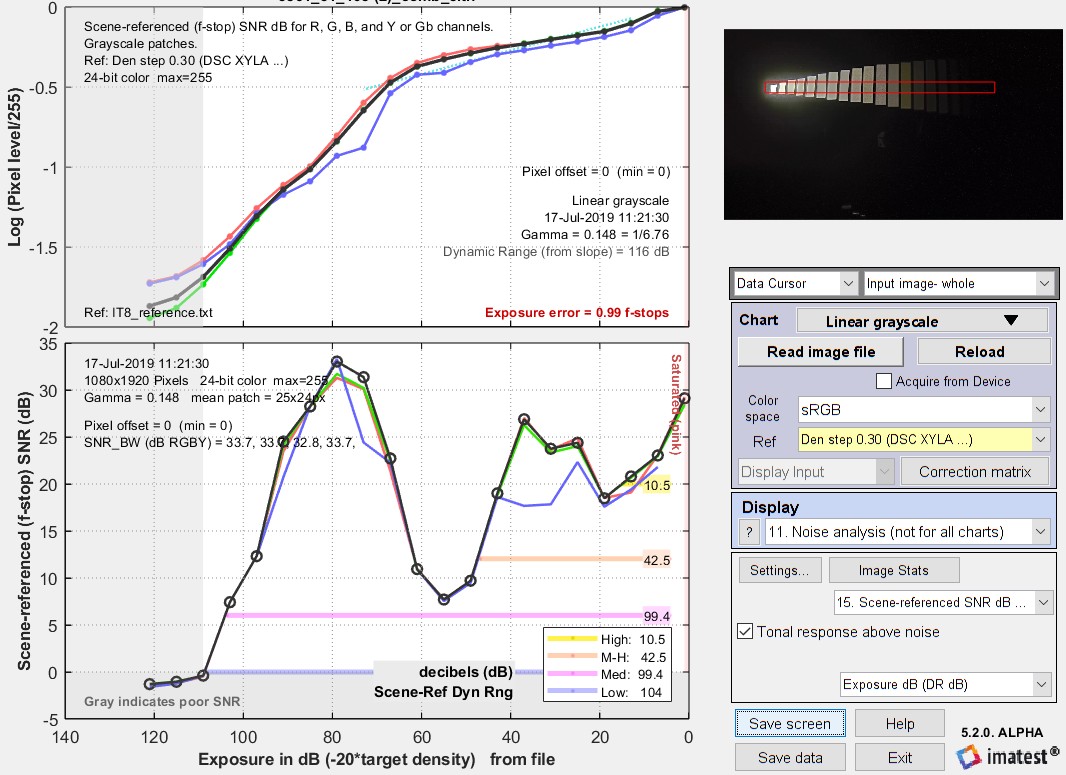

Results of the XYLA image (above) showing strong tone mapping. Some notes on this image: it has very strong local tone mapping, leading to an exceptionally low (and not very meaningful) measured gamma of 0.148. The SNR varies in an unusual way: it doesn’t drop monotonically, as it would for a conventional linear image sensor. This indicates that an HDR (High Dynamic Range) image sensor with several operating regions was used. Because the image had significant barrel distortion, region selection was difficult. The Contrast Resolution chart is much easier to use and provides a better indication of system performance in the presence of tone mapping.

If the flare light were any worse it could easily have been mistaken for a signal from the chart itself, leading to a false Dynamic Range measurement.

Flare light can be extremely complex. It can add an offset to the image (often called “veiling glare”), which is difficult to distinguish from a black level offset in the image processing pipeline. Most of the time it is largest near bright patches, then decreases with distance from these patches. The rate of decrease is rarely a well-behaved exponential.

Lens reflections are a major cause of medium-range flare light. An uncoated glass surface (index of refraction ≅ 1.5) reflects R = 4% = 0.04 of the light incident on it. (Remember, a sheet of glass or a lens component has two surfaces.)
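The 4% figure comes from the Fresnel reflectance at normal incidence, \(R = \left(\frac{n_1 - n_2}{n_1 + n_2}\right)^2\), here with air (n = 1.0) on one side. The sketch below also estimates, for a single uncoated plate in air and ignoring absorption and higher-order bounces, the fraction transmitted straight through and the fraction that exits toward the sensor after one reflection at each surface, the kind of path that produces ghosts and flare. Names are illustrative.

```python
def fresnel_normal_reflectance(n1, n2=1.0):
    """Reflectance at normal incidence for an uncoated interface between n1 and n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def uncoated_plate(n):
    """Single uncoated plate in air, ignoring absorption and higher-order bounces.

    Returns (reflectance_per_surface, transmitted, double_reflected).
    double_reflected is light that enters, reflects off the back surface,
    reflects off the front surface, then exits toward the sensor as stray light.
    """
    r = fresnel_normal_reflectance(n)
    transmitted = (1.0 - r) ** 2
    double_reflected = (1.0 - r) ** 2 * r * r
    return r, transmitted, double_reflected


print(uncoated_plate(1.5))
```

For n = 1.5 this gives R = 0.04 per surface, about 92% transmitted straight through, and about 0.15% of the incident light arriving at the sensor as a doubly reflected ghost from just one uncoated element.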